I’ve written before that AI is Fundamentally Unintelligent. I’ve also written that AI is Slowing Down (part 2 here). One would perhaps consider me a pessimist or cynic of AI if I were to write another post criticising AI from another perspective. But please don’t think ill of me as I embark on exactly such an endeavour. I love AI and always will, which is why most of my posts show AI and its achievements in a positive light (my favourite post is probably on the exploits of GPT-3).

But AI is not only fundamentally unintelligent; at the moment it is also fundamentally over-hyped. And somebody has to say it. So, here goes.

When new disruptive technological innovations hit the mainstream, hype inevitably follows. This seems to be human nature. We saw this with the dot-com bubble, we saw it with Bitcoin and co. in 2017, we saw it with Virtual Reality in around 2015 (purportedly, VR is on the rise again, although I’m yet to be convinced about its touted potential success), and likewise with 3D glasses and 3D films at around the same time. The mirages come and then dissipate.

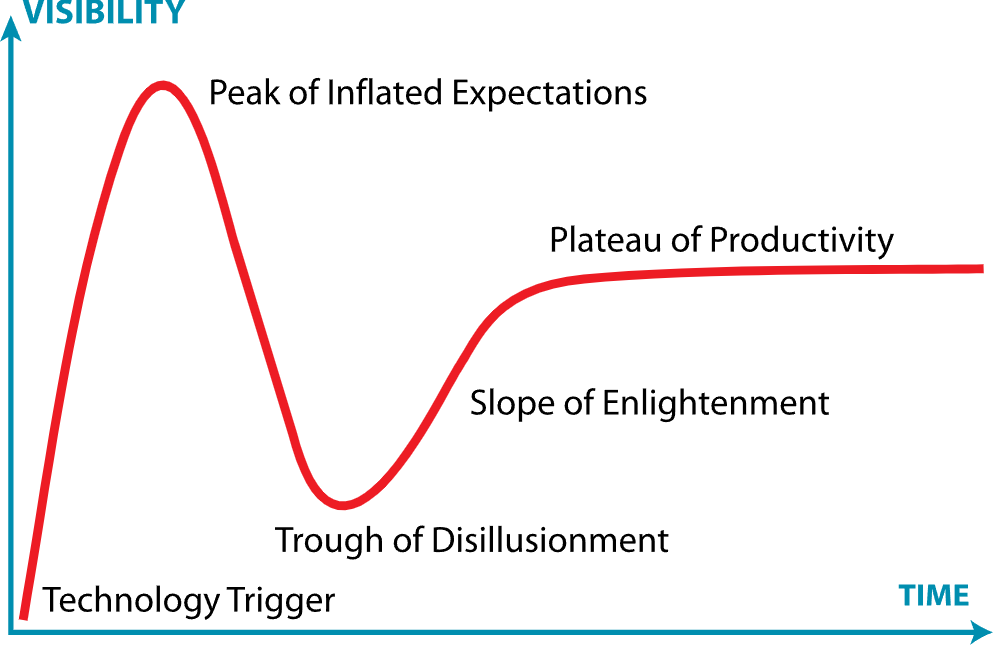

The hype and delusion that tend to follow the exceptional growth and success of a technological innovation are so common that people have come up with ways to describe the pattern. Indeed, Gartner, the American research, advisory and information technology firm, developed a graphical representation of this phenomenon. They call it the “Gartner Hype Cycle”, and it is portrayed in the image below:

It is my opinion that we have just passed the initial crest and are slowly learning that AI has not lived up to our expectations. A vast number of projects that were initially deemed to be sound undertakings are failing today. Some projects are failing so badly that the average person, on hearing of them, turns to common sense and wonders why it has been abandoned by the seemingly bright minds of the world.

Here are some sobering statistics:

- 85% of AI projects fail (Pactera Technologies, 2019; Gartner, 2017)

- 87% of data science projects never make it to production (Venture Beat, 2019)

- Things are so bleak that in 2019, Gartner predicted that “through 2022, only 20% of analytic insights will deliver business outcomes” (Gartner, 2019)

Those are quite staggering numbers. Now, the reasons behind the failures of these projects are numerous: bad data, poor access to data, poor data science practices, etc. But I wish to argue my case that a significant part of the problem is that AI (this would also encompass data science) is over-hyped, i.e. that we believe too much in data and especially too much in AI’s capabilities. There seems to be a widely held belief that we can throw AI at anything and it will find an appropriate solution.

Let’s take a look at some of the projects that have failed in the last few years.

In 2020 two professors and a graduate student from Harrisburg University in Pennsylvania announced that they were publishing a paper entitled “A Deep Neural Network Model to Predict Criminality Using Image Processing”. This paper claimed the following:

With 80 percent accuracy and with no racial bias, the software can predict if someone is a criminal based solely on a picture of their face. The software is intended to help law enforcement prevent crime.

What’s more, this paper was accepted for publication by the prestigious academic publisher Springer Nature. Thankfully, a backlash ensued among the academic community, which condemned the paper, and Springer Nature confirmed on Twitter that the paper would not be published.

Funnily enough, a paper on a nearly identical topic, entitled “Criminal tendency detection from facial images and the gender bias effect”, was also due to be published in the Journal of Big Data that same year. This paper was also retracted.

It is mind-boggling to think that people, moreover experienced academics, could possibly believe that faces can disclose potential criminal tendencies in a person. Some people definitely have a mug that if spotted in a dark alley in the middle of the night would give anybody a heart attack, but this is still not an indicator that the person is a criminal.

Has common sense been thrown out the door? Are AI and data science perceived as great omniscient entities that should be adored and definitely not ever questioned?

Let’s see what other gaffes have occurred in the recent past.

In 2020, as the pandemic was in full swing, university entrance exams in the UK (A-levels) were cancelled. So, the British government decided to develop an AI algorithm to automatically grade students instead. Like that wasn’t going to backfire!? It is a perfect example, however, of too much trust being put in artificial intelligence, especially by the cash-strapped public sector. The whole thing turned into a scandal because, of course, the algorithm didn’t do its intended job. Around 40% of students had their grades lowered, and the algorithm favoured those from private schools and wealthy areas. There was obviously demographic bias in the data used to train the model.

But the fact that an algorithm was used to directly make important, life-changing decisions impacting the public is a sign that too much trust is being placed in AI. There are some things that AI just cannot do – looking past raw data is one such thing (more on this in a later post).

This trend of over-trusting AI in the UK was revealed in 2020 to run much deeper than previously thought, however. One study by the Guardian found that one in three councils were (in secret) using algorithms to help make decisions about benefit claims and other welfare issues. The Guardian also found that about 20 councils had stopped using an algorithm to flag claims as “high risk” for potential welfare fraud. Furthermore, Hackney council in East London abandoned using AI to help predict which children were at risk of neglect and abuse. And then, the Home Office was embroiled in a scandal of its own when it was revealed that its algorithm to determine visa eligibility allegedly had racism entrenched in it. And the list goes on.

Dr Joanna Redden from the Cardiff Data Justice Lab, who worked on researching why so many algorithms were being cancelled, said:

[A]lgorithmic and predictive decision systems are leading to a wide range of harms globally, and also that a number of government bodies across different countries are pausing or cancelling their use of these kinds of systems. The reasons for cancelling range from problems in the way the systems work to concerns about negative effects and bias.

Indeed, perhaps it’s time to stop placing so much trust in data and algorithms? Enough is definitely not being said about the limitations of AI.

The media and charismatic public figures are not helping the cause either. They’re partly to blame for these scandals and failures that are causing people grief and costing the taxpayers millions because they keep this hype alive and thriving.

Indeed, level-headedness never makes the headlines – only sensationalism does. So, when somebody like the billionaire tech-titan Elon Musk opens his big mouth, the media laps it up. Here are some of the things Elon has said in the past about AI.

In 2017:

I have exposure to the most cutting edge AI, and I think people should be really concerned by it… AI is a fundamental risk to the existence of human civilization.

2018:

I think that [AI] is the single biggest existential crisis that we face and the most pressing one.

2020:

…we’re headed toward a situation where A.I. is vastly smarter than humans and I think that time frame is less than five years from now.

Please, enough already! Anybody with “exposure to the most cutting edge AI” would know that as AI currently stands, we are nowhere near developing anything that will “vastly outsmart” us by 2025. As I’ve said before (here and here), the engine of AI is Deep Learning, and all evidence points to the fact that this engine is in overdrive – i.e. that we’re slowly reaching its top speed. We soon won’t be able to squeeze anything more out of it.

But when Elon Musk says stuff like this, it captures people’s imaginations and it makes the papers (e.g. CNBC and The New York Times). He’s lying, though. Blatantly lying. Why? Because Elon Musk has a vested interest in over-hyping AI. His companies thrive on the hype, especially Tesla.

Here’s proof that he’s a liar. Elon has predicted for nine years in a row, starting in 2014, that autonomous cars are at most a year away from mass production. I’ll say that once again: for nine years in a row, Elon has publicly stated that autonomous cars are only just around the corner. For example:

2016:

My car will drive from LA to New York fully autonomously in 2017

It didn’t happen. 2019:

I think we will be feature-complete full self-driving this year… I would say that I am certain of that. That is not a question mark.

It didn’t happen. 2020:

I remain confident that we will have the basic functionality for level five autonomy complete this year… I think there are no fundamental challenges remaining for level five autonomy.

It didn’t happen. 2022:

And my personal guess is that we’ll achieve Full Self-Driving this year, yes.

It’s not going to happen this year, either, for sure.

How does he get away with it? Maybe because the guy oozes charisma? It’s obvious, though, that he makes money by talking in this way. Those of us working directly in the field of AI, however, have had enough of his big mouth. Here, for example, is Jerome Pesenti, head of AI at Facebook, venting his frustration at Elon on Twitter:

I believe a lot of people in the AI community would be ok saying it publicly. @elonmusk has no idea what he is talking about when he talks about AI. There is no such thing as AGI and we are nowhere near matching human intelligence. #noAGI

— Jerome Pesenti (@an_open_mind) May 13, 2020

Jerome will never make the papers by talking down AI, though, will he?

There was a beautiful example of how the media goes crazy over AI only recently, in fact. A month ago, Google developed its own new language model (think: chatbot) called LaMDA, which is much like GPT-3. It can sometimes hold very realistic conversations. But ultimately it is still just a machine – as dumb as a can of spaghetti. The chatbot follows simple processes behind the scenes, as Business Insider reports.

However, there was one engineer at Google, Blake Lemoine, who wanted to make a name for himself and who decided to share some snippets of his conversations with the program to make a claim that the chatbot has become sentient. (Sigh).

Here are some imagination-grabbing headlines that ensued:

- Google AI researcher [says] that its AI system is a ‘child’ that has the potential to ‘do bad things’ and says company has not thought through its implications (Daily Mail)

- Does this AI know it’s alive? (Vox)

- Dangers Of AI: Why Google Doesn’t Want To Talk About Its Sentient Chatbot (Outlook India)

- The Google engineer who thinks the company’s AI has come to life (The Washington Post)

Blake Lemoine is loving the publicity. He now claims that the AI chatbot has hired itself a lawyer to defend its rights and that they are also now friends. Cue the headlines again (I’ll spare you the list of eye-rolling, tabloid-like articles).

Google has since suspended its engineer for causing this circus and released the following statement:

Our team — including ethicists and technologists — has reviewed Blake’s concerns… and have informed him that the evidence does not support his claims. He was told that there was no evidence that LaMDA was sentient (and lots of evidence against it). [emphasis mine]

I understand how these “chatbots” work, so I don’t need to see any evidence against Blake’s claims. LaMDA just SEEMS sentient SOMETIMES. And that’s the problem. If you only share cherry-picked snippets of an AI entity, if you only show the parts that are obviously going to make headlines, then of course, there will be an explosion in the media about it and people will believe that we have created a Terminator robot. If, however, you look at the whole picture, there is no way that you can come away convinced that there is sentience in this program (I’ve written about this idea for the GPT-3 language model here).

Conclusion

This ends my discussion on the topic that AI is over-hyped. So many projects are failing because of it. We as taxpayers are paying for it. People are getting hurt and even dying (more on this later) because of it. The media needs to stop stoking the fire because they’re not helping. People like Elon Musk need to keep their selfish mouths shut. And more level-headed discussions need to take place in the public sphere. I’ve written about such discussions before in my review of “AI Superpowers” by Kai-Fu Lee. He has no vested interest in exaggerating AI and his book, hence, is what should be making the papers, not some guy called Blake Lemoine (who also happens to be a “pagan/Christian mystic priest”, whatever that means).

In my next post I will extend this topic and discuss it in the context of autonomous cars.
