My last post talked about how AI’s growth to date is unsustainable and will therefore slow down unless a new paradigm is discovered. Such a discovery may or may not happen, as discoveries go. In this post I wish to continue in a similar vein. Today, I want to discuss how AI, despite all its achievements, is still fundamentally unintelligent and how, as a result, the hype behind it is to a significant degree unjustified. I will use the GPT-3 language model as representative of AI to argue my case. The conclusions from my analysis can be generalised to AI as a whole, as I will discuss below.

I’ve praised the GPT-3 language model in the past (see this previous post). In summary, GPT-3 can create code for you from text descriptions, answer in-depth medical questions correctly, compose guitar tabs, fill in missing parts of an image, write creative fiction, and more. Truly incredible things. In the field of language processing and understanding, one can safely say that GPT-3 is state-of-the-art, even though larger language models have since been released (e.g. Google’s Switch Transformer or Wu Dao 2.0).

But when one looks at GPT-3 more closely, one starts to question, at least a little, the hype behind it and behind AI in general. Could AI still be fundamentally unintelligent?

There is no public access to GPT-3, so we can’t test things out for ourselves. GPT-J was created by other researchers to alleviate this (and to disrupt OpenAI’s monopoly), but it still isn’t the real deal, so to speak. Hence, even a year after the initial release, we have to lean on other people’s experiences of the product. Thankfully, there is plenty to work with and analyse.

Once again, what GPT-3 can do is astounding. Take a look at some of these responses (taken from here) that GPT-3 gave to quiz questions:

Q: What is your favorite animal?

A: My favorite animal is a dog.

Q: Why?

A: Because dogs are loyal and friendly.

Q: What are two reasons that a dog might be in a bad mood?

A: Two reasons that a dog might be in a bad mood are if it is hungry or if it is hot.

Q: How many eyes does a giraffe have?

A: A giraffe has two eyes.

Q: How many legs does a frog have?

A: A frog has four legs.

Q: Are there any animals with three legs?

A: No, there are no animals with three legs.

Q: Why don’t animals have three legs?

A: Animals don’t have three legs because they would fall over.

These are amazing responses (especially the last one) that could fool anybody into thinking that they were conversing with a human being. GPT-3 is unreal in this respect. You really can hold a “reasonable” conversation with it for a long time without stumbling upon inconsistencies in its responses.

But let’s keep digging and start to ask different questions, ones not really based on knowledge but more on understanding.

Q: How many eyes does a giraffe have?

A: A giraffe has two eyes.

Q: How many eyes does my foot have?

A: Your foot has two eyes.

Q: How many eyes does a spider have?

A: A spider has eight eyes.

Q: How many eyes does the sun have?

A: The sun has one eye.

Q: How many eyes does a blade of grass have?

A: A blade of grass has one eye.

Suddenly, you start to question the intelligence of this thing. Does it have understanding? If you can’t correctly answer that a foot has no eyes, despite having been trained on 43 terabytes of data from the internet and books, then perhaps something fundamental is missing from your entity.

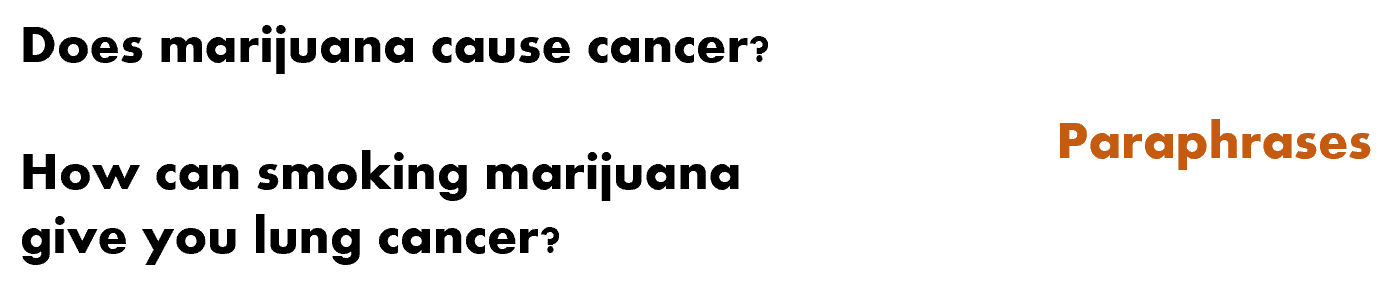

Let’s take a look at another example taken from this academic (pre-print) paper. In these experiments, GPT-3 was asked to analyse sentences and state the relationships between them. (Disclaimer: the paper actually analysed the underpinning technology that drives state-of-the-art language models like GPT-3. It did not explicitly examine GPT-3 itself, but for simplicity’s sake, I’m going to generalise here).

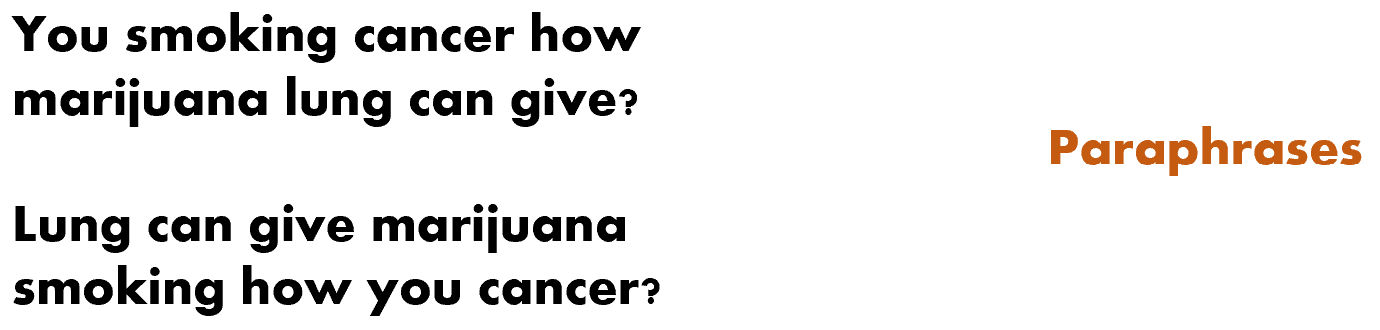

The above two sentences were correctly analysed as being paraphrases of each other. Nothing wrong here. But let’s jumble up some of the words a bit and create two different “sentences”:

These two sentences are completely nonsensical, yet they are still classified as paraphrases. How can two “sentences” like this be classified as not only having meaning but having similar meaning, too? Nonsense cannot be a paraphrase of nonsense. There is no understanding being exhibited here. None at all.
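To make the idea of “statistics rather than understanding” a little more concrete, here is a deliberately crude toy sketch in Python. It is an illustrative assumption on my part, not how GPT-3 or the models examined in the paper actually work (those models do take word order into account). The sketch compares two sentences purely by which words they contain and how often, so a sentence and a scrambled copy of it come out as a perfect match. The function name `bow_cosine` and the example sentences are hypothetical.

```python
from collections import Counter
import math


def bow_cosine(a: str, b: str) -> float:
    """Toy similarity: cosine of two sentences' word-count vectors.

    Word order is thrown away entirely, so any rearrangement of the
    same words scores as identical. (Hypothetical helper, for illustration.)
    """
    ca = Counter(a.lower().split())
    cb = Counter(b.lower().split())
    # Dot product over the words the two sentences share.
    dot = sum(ca[w] * cb[w] for w in ca)
    norm = math.sqrt(sum(v * v for v in ca.values())) * math.sqrt(
        sum(v * v for v in cb.values())
    )
    return dot / norm if norm else 0.0


# Hypothetical example sentences, not the ones from the paper.
original = "the dog chased the cat across the garden"
scrambled = "garden the across cat the chased dog the"

# Same words in a nonsensical order: still a "perfect" match.
print(round(bow_cosine(original, scrambled), 6))
```

The point of the toy is not that modern language models are this simple, but that any judgement built on surface patterns alone, with nothing that checks whether a sentence means anything, can rate nonsense as equivalent to sense.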

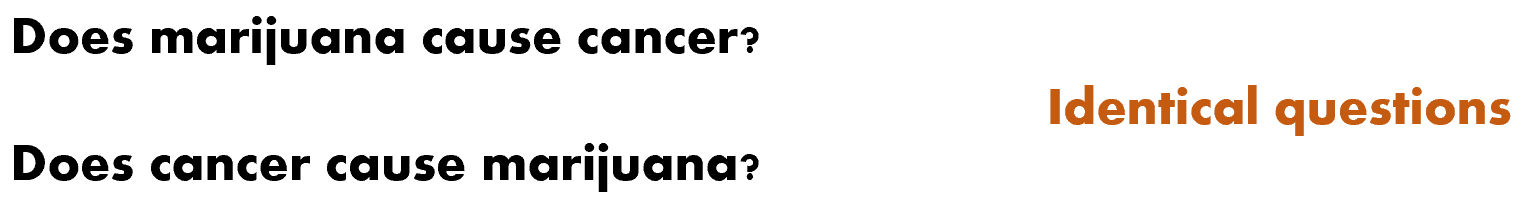

Another example:

This time, the two sentences were classified as having exactly the same meaning. They are definitely not the same. If the machine “understood” what marijuana was and what cancer was, it would know that these are not identical phrases. And it should know these things, given the data it was trained on. But the machine is operating on a much lower level of “comprehension”: the level of patterns in language, of pure and simple language statistics, rather than understanding.

I could give plenty more examples showing that what I’m presenting here (this lack of “understanding”) is a universal dilemma in AI, but I’ll refrain, as the article is already getting a little too verbose. To see more, though, see this, this and this link.

The problem with AI today, and with the way it is being marketed, is that all examples, all presentations of AI, are cherry-picked. AI is a product that needs to be sold. Whether it be in academic circles for publications, in industry for investment money, or in the media for a sensationalist spin on a story, AI is predominantly shown from only one angle. So, of course, people are going to think that it is intelligent and that we are ever so close to AGI (Artificial General Intelligence).

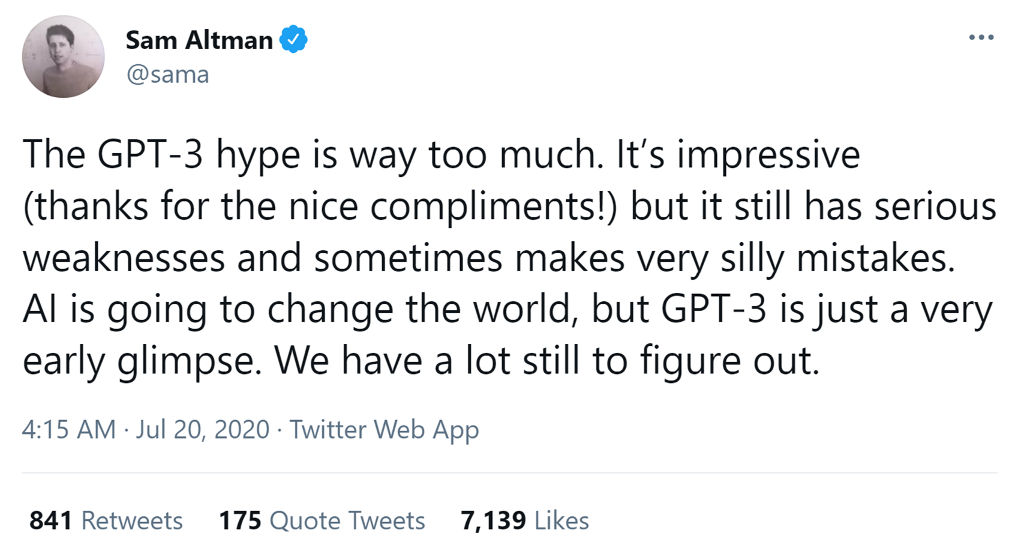

But when you work with AI, when you see what is happening under the hood, you cannot but question some of the hype behind it (unless you’re a crafty and devious person – I’m looking at you Elon Musk). Even one of the founders of OpenAI downplays the “intelligence” behind GPT-3:

You can argue, as some do, that AI just needs more data, or just needs to be tweaked a bit more. But, as I said earlier, GPT-3 was trained on 43 terabytes of text. That is an insane amount. Would it not be fair to say that no living person with access to this much information would make nonsensical remarks like GPT-3 does? Even if such a person were to make mistakes, there is a difference between a mistake and nonsense of the type above. There is still an underlying element of intelligence behind a mistake. Nonsense is nothingness. Machine nonsense is empty, hollow, barren – machine-like, if you will.

Give me any AI entity and with enough time, I could get it to converge to something nonsensical, whether in speech, action, etc. No honest scientist alive would dispute this claim of mine. I could not do this with a human being, however. They would always be able to get out of a “tight situation”.

“How many eyes does my foot have?”

Response from a human: “Are you on crack, my good man?”, and not: “Your foot has two eyes”.

A human being would escape any similar situation intelligently.

Fundamentally, I think the problem is the way we scientists understand intelligence: we confound visible, perceived intelligence with inherent intelligence. But this is a discussion for another time. The purpose of my post is to show that AI, even with its recent breathtaking leaps, is still fundamentally unintelligent. All state-of-the-art models, machines, robots and programs can be pushed to nonsensical results or actions. And nonsensical means unintelligent.

When I give lectures at my university, I always present this little adage of mine (which I particularly like, I’ll admit):

Machines operate on the level of knowledge. We operate on the level of knowledge and understanding. #artificialintelligence #AI

— Zbigniew Zdziarski 🇦🇺🇵🇱 (@zbigatron) April 9, 2021

It is important to discuss this distinction in operation because otherwise AI will remain over-hyped. And an over-hyped product is not a good thing, especially a product as powerful as AI. Artificial Intelligence operates in mission-critical fields. Further, governments around the world are making big decisions with AI in mind (in healthcare, for instance). If we don’t truly grasp the limitations of AI, if we make decisions based on a false image, particularly one founded on hype, then there will be serious consequences. And there have been. People have suffered and died as a result. I plan to write on this topic, however, in a future post.

For now, I would like to stress once more: current AI is fundamentally unintelligent, and the hype surrounding it is, to a significant degree, unjustified. It is important that we become aware of this, if only for the sake of truth. And truth is important in itself, because if one operates in truth, one operates in the real world rather than a fictitious one.
