This post was inspired by questions I’ve been seeing on Quora and Reddit recently regarding AI and programming. They all sound more or less like this:

In this age of advanced AI, is it still worth learning to code computer programs?

People have seen the incredible ability of chatbots such as ChatGPT to generate computer code, and they’re starting to ask whether computer programming may become obsolete for humans in the near future.

This is a seemingly legitimate concern for those outside of computer science and for those unacquainted with the art of software engineering. If AI is able to write simple code now, it figures that in the future it’s only going to get better and better at this task until we won’t need humans to do it any more. So, why bother studying computer programming now?

But for those in the industry of software engineering the answer is dead simple. I’ll let Frederick Brooks, legendary computer scientist, provide us with a succinct response:

Software work is the most complex that humanity has ever undertaken.

Fred Brooks (I couldn’t find the original source of this quote – apologies)

Indeed, anybody who has ever worked in software engineering automatically grasps the immensity of the task and knows that AI has a long way to go before it supplants human workers. Fred Brooks in fact wrote a seminal essay in 1986 on the complexity of software engineering entitled: “No Silver Bullet—Essence and Accident in Software Engineering”. This is one of those classic papers that every undergraduate student in Computer Science reads (or should read!) as part of their curriculum. Despite it being written in the 80s, most of what Brooks talks about incredibly still holds true (like I said, the man is a legend – Computer Scientists would also know him from his famous book “The Mythical Man-Month”).

In “No Silver Bullet” Brooks argues that there is no simple solution (i.e. no silver bullet) to reduce the complexity of writing software. Any advances made in the trade don’t tackle this inherent (“essential”) complexity but solve secondary (“accidental”) issues. Whether it be advances in programming languages (e.g. object-oriented languages), environments (IDEs), design tools, or hardware (e.g. to speed up compiling) – these advances tackle non-core aspects of software engineering. They help, of course, but the essence of building software, i.e. the designing and testing of the “complex conceptual structures that compose the abstract software entity,” is the real meat of the affair.

Here is another pertinent quote from the essay:

I believe the hard part of building software to be the specification, design, and testing of this conceptual construct, not the labor of representing it and testing the fidelity of the representation. We still make syntax errors, to be sure; but they are fuzz compared to the conceptual errors in most systems. If this is true, building software will always be hard. There is inherently no silver bullet.

Frederick Brooks, No Silver Bullet

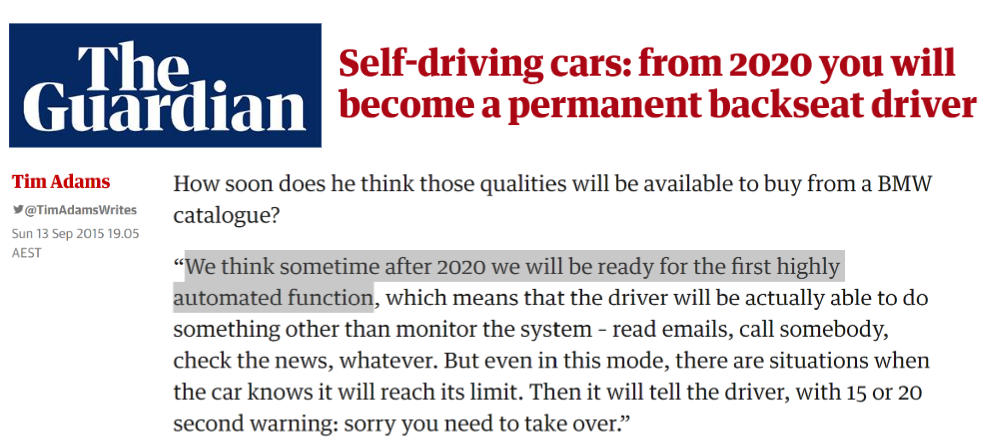

Autonomous cars are perhaps a good analogy to use here to illustrate my point. Way back in 2015 things were looking good as AI was advancing. Below is a story from the Guardian with a prediction made by BMW:

That has not materialised for BMW.

You can’t talk about autonomous cars without mentioning Elon Musk. Elon has predicted for nine years in a row, starting in 2014, that autonomous cars are at most a year away from mass production. I’ll say that once again: for nine years in a row, Elon has publicly stated that full self-driving (FSD) cars are only just around the corner. For example:

2016:

My car will drive from LA to New York fully autonomously in 2017

It didn’t happen. 2019:

I think we will be feature-complete full self-driving this year… I would say that I am certain of that. That is not a question mark.

It didn’t happen. 2020:

I remain confident that we will have the basic functionality for level five autonomy complete this year… I think there are no fundamental challenges remaining for level five autonomy.

It didn’t happen. 2022:

And my personal guess is that we’ll achieve Full Self-Driving this year, yes.

That didn’t happen either. And in 2023 FSD is still in Beta mode with stacks of complaints piling up on internet forums regarding its unreliability.

Another story fresh off the blocks comes from San Francisco. Last month, two rival taxi companies (Waymo and Cruise) were given permission to operate their autonomous taxi fleets in the city 24/7. A week later, Cruise was ordered to cut its fleet by half as the city investigates two crashes that involved its vehicles. One of these crashes was with a fire truck driving with its lights and sirens blaring. Reportedly, the taxi failed to handle the emergency situation appropriately (an edge case?). This incident came right after a hearing on August 7 in which the San Francisco fire chief, Jeanine Nicholson, warned the city about autonomous taxis, citing 55 incidents.

The thing I’m trying to illustrate here is that autonomous driving is an example of a task that was once thought to be assailable by AI but over time has simply proven to be a much harder use case than expected. Heck, even Elon Musk admitted this in June 2022: “[developing self-driving cars was] way harder than I originally thought, by far.” AI is not omnipotent.

So, if we take this example together with Brooks’s observation that “software work is the most complex that humanity has ever undertaken,” it follows that we’re still a long, long way off from automating the process of software engineering with AI.

Will AI disrupt the coding landscape? Yes. It’s capable of doing really nifty things at the moment and should improve on these abilities in the near future.

Will AI take over coding jobs? Yes. But only some. The core of software engineering will remain untouched. The heavy, abstract stuff is just too hard for a machine to simply enter onto the scene and start dictating what and how things should be done.

Our jobs are safe for the foreseeable future. Learn to code, people!

(Note: If this post is found on a site other than zbigatron.com, a bot has stolen it – it’s been happening a lot lately)


I’m a software developer who used ChatGPT for work for a couple of months, and I’d say that at this moment it is a useful tool for generating boring boilerplate code or for figuring out how to do something with complex APIs like AWS CDK.

Nevertheless, I find it incapable of creating more complex systems. It still needs human supervision, as it sometimes fails miserably at its tasks.

I’ve also had a situation where solutions proposed by ChatGPT pushed me in the wrong direction, and I lost some time before figuring that out.

I don’t know what the future will bring, but I’m pretty confident that my career is secure for now. And I’m happy to have a tool that makes part of my job much easier.

Exactly. Your career is secure for now. And I’m glad you can see that from experience.

Thanks for your comment.