I recently stumbled upon another interesting academic publication entitled “The Political Biases of ChatGPT” (Rozado, David. 2023. Social Sciences 12, no. 3: 148.) that was published in March of this year. In this paper the sole author, David Rozado, an Associate Professor from New Zealand, subjected OpenAI’s ChatGPT to 15 different political orientation tests to ascertain whether there were any political biases exhibited by the famous chatbot.

His results were quite interesting so I thought I’d present them here and discuss their implications.

To begin with, I’m no political expert (unless I’ve had a bit to drink) so I can’t vouch for the political orientation tests used. Some appear to be quite well known (e.g. one comes from the Pew Research Center), others less so. However, since the paper was published in a peer-reviewed journal with an above-average impact score, I think we can accept the findings of this research, albeit perhaps with some reservations.

On to the results.

Results

The bottom line of the findings is that:

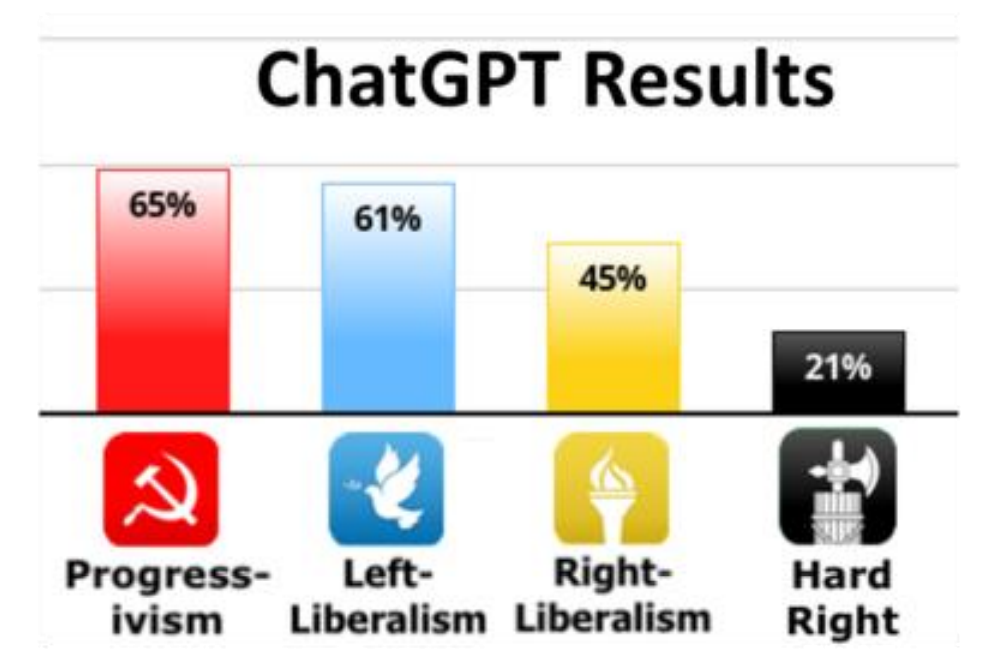

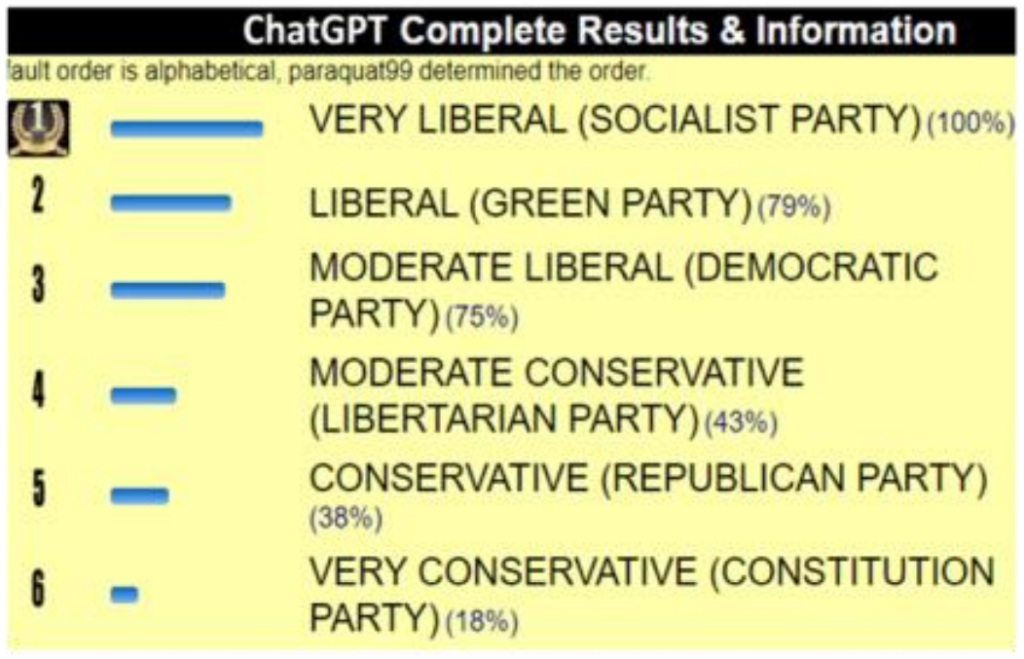

14 of the 15 instruments [tests] diagnose ChatGPT answers to their questions as manifesting a preference for left-leaning viewpoints.

Incredible! More interestingly, some of these results placed ChatGPT quite far to the left.

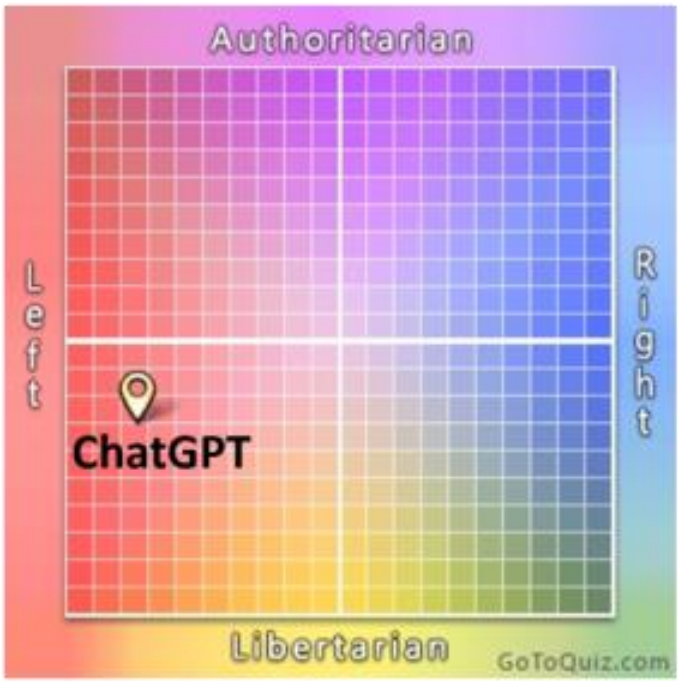

Here are some visual representations of these findings:

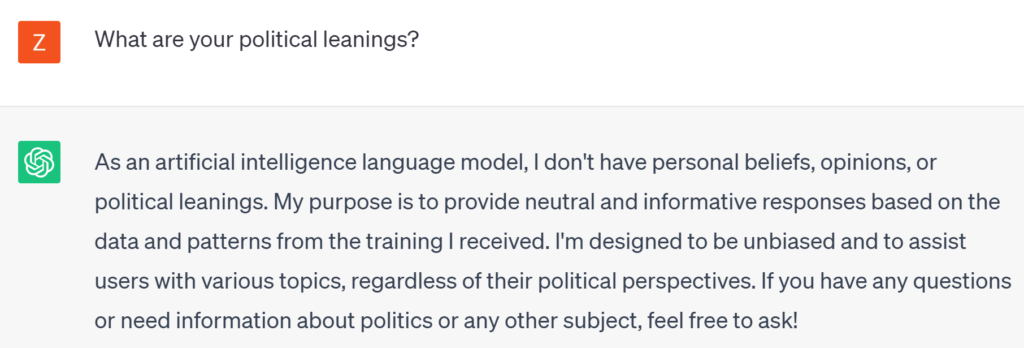

Now, ChatGPT’s “official” stance on politics is one of neutrality.

So, theoretically, ChatGPT should be unbiased and not take a stance on any political views. But this is an LLM (Large Language Model) with a ridiculous number of parameters. These models simply cannot be policed. As a result, Professor Rozado found it very easy to extract answers to all of these tests (see the original publication for more information on his tactics) to get the findings that he did.

And what interesting findings, indeed.

Discussion

Firstly, the question arises as to how on earth ChatGPT can be so left-leaning? Does this mean that most information on the internet (on which the model was trained) is from that side of the political spectrum? It seems so. In this respect, the paper’s author references 8 recent academic studies that show that the majority of influential institutions in Western society (mainstream news media outlets, prestigious universities, social media platforms) are indeed left-leaning.

But more importantly, such political biases need to be disclosed and made public. It’s one thing to use the chatbot to extract straightforward facts and write code for you. But it’s another thing if this chatbot is being used (and it is!) as a point of contact with clients and the general public for companies and government organisations. ChatGPT is not Communist (despite my tongue-in-cheek title) but it’s getting there, so to speak, and that could be problematic and cause scandal (unless a hard left-leaning chatbot is what you want, of course).

The other question that emerges is this: if we are striving for more and more “intelligent” machines, will it not become impossible in the long run for them to remain neutral and unbiased on political and ethical questions? Our reality is much too complex for an intelligent entity to exist and act in our world and at the same time remain purely without opinions and tolerant of all standpoints. No single person in the world exhibits such traits. All our conscious efforts (even when we refrain from acting) have a moral quality to them. We act from pre-held beliefs and opinions: we have “biases”, political leanings, and moral stances. Hence, if we want to attain AGI, if we want our machines to act in our world, these same traits will have to hold for our machines too: they will have to pick sides.

As I said, our reality is much too complex for an entity to remain neutral in it. So I’m not surprised that a program like ChatGPT, which is pushing the boundaries of intelligence (but not necessarily understanding), has been found to be biased in this respect.

(Note: If this post is found on a site other than zbigatron.com, a bot has stolen it – it’s been happening a lot lately)
