We were recently handed an open letter pleading with us to pause giant AI experiments and, in the meantime, to “ask ourselves…Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization?”

Prominent names in tech, such as Elon Musk and Steve Wozniak, are signatories to this letter, and as a result it made headlines all over the world with the usual hype and pomp surrounding anything even remotely related to AI.

Time magazine, for instance, published an op-ed by Eliezer Yudkowsky only last month that contained this:

> I refrained from signing because I think the letter is understating the seriousness of the situation and asking for too little to solve it… Many researchers steeped in these issues, including myself, expect that the most likely result… is that literally everyone on Earth will die.

We’re used to end-of-the-world talk like this, though, aren’t we? In 2014, Prof Stephen Hawking warned that “The development of full artificial intelligence could spell the end of the human race.” And, of course, we have Elon Musk, who is at the forefront of this kind of rhetoric. For example, in 2020 he said: “We’re headed toward a situation where AI is vastly smarter than humans and I think that time frame is less than five years from now.”

The talk on the streets amongst everyday folk seems to be similar, too. How could it not be, when the media bombards us with doom and gloom (because sensationalism is what sells papers, as I’ve said in previous posts) and authority figures like those mentioned above talk like this?

Is society scared of AI? It seems so, and I’m noticing it more and more. Other very prominent figures are trying to talk common sense and bring down the hype, and have even publicly opposed last month’s open letter. Titans of AI like Yann LeCun and Andrew Ng (who are 1,000 times greater AI experts than Elon Musk, btw) have said that they “disagree with [the letter’s] premise” and that a 6-month pause “would actually cause significant harm”. Voices like these, however, are not being heard.

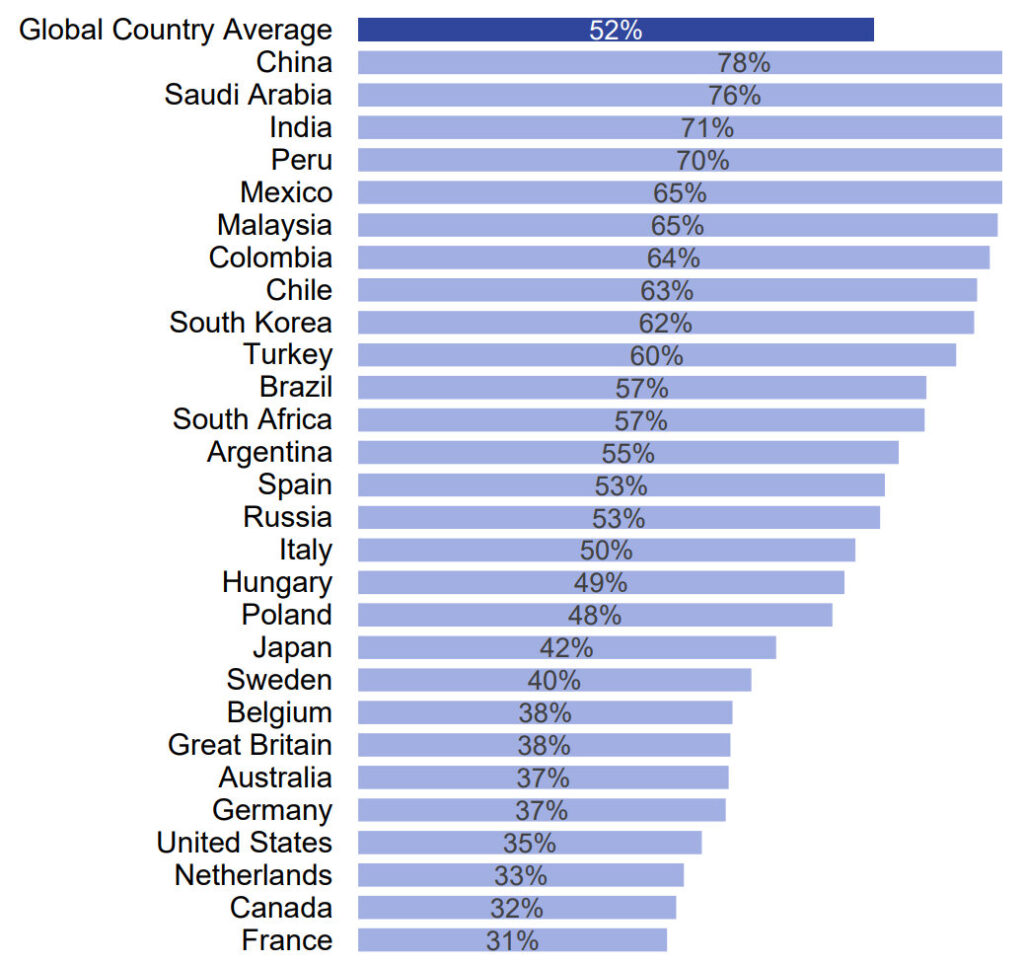

But then the other day while I was reading through the annual AI Index Report released by Stanford Institute for Human-Centered Artificial Intelligence (HAI) (over 300 pages of analysis capturing trends in AI) this particular graph stood out for me:

What struck me was how Asia and South America (Japan being the sole exception) want to embrace AI and are generally fans of it. Europe and the US, on the other hand, not so much.

This got me thinking: is this fear of AI dominant only in Europe and the US, and, if so, is it a cultural thing?

Now, right off the bat, the reasons for Asia and South America embracing AI could be numerous and not necessarily cultural. For example, many of these are lower-income countries, and perhaps people there see AI as a quick route to a better life in the present. Fair enough.

Likewise, the reasons behind Europe and the US eschewing AI could be purely economic and short-term: people there may fear the imminent disruption to jobs that can follow developments in technology rather than directly fearing an AI apocalypse.

In spite of all this, and understanding that correlation does not necessarily entail causation, perhaps there’s something cultural going on after all. The most prominent signatories to the recent open letter seem to have come purely from the US and Europe.

I had two teaching stints in India last year and one in the Philippines. One of the topics I lectured on was AI, and as part of a discussion exercise I got my students to debate with me on this very question, i.e. whether we are at all capable, in the near or distant future, of creating something that will outsmart and then annihilate us. The impression I got was that the students in these countries had a much deeper appreciation of the uniqueness of human beings as compared to machines. There was something intrinsically different in the way they referred to AI compared to the people I talk to daily in my home country of Australia and my second home, Europe.

Of course, these are just my private observations and a general “feeling” that I got while working in those two countries. The sample size would have been something like 600 students, and even then it was not possible for me to get everybody’s opinion on the matter, let alone ask all my classes to complete a detailed survey.

Regardless, I think I’m raising an interesting question.

Could the West’s post-Descartes and post-Enlightenment periods have instilled in us a more intrinsic feeling that rationality and consciousness are things that can easily be manipulated, simulated and ultimately enhanced? Prior to the Enlightenment, man was whole (that is, consciousness was not a distinct element of his existence), and any imitation of his rationality would always have been regarded as inferior, however excellent the imitation.

The Turing test would not have been a thing back then. Who cares if somebody is fooled by a machine for 15 minutes? Ultimately, it is still a machine, something made of nothing but dead matter that could never transcend into the realm of understanding, especially the understanding of abstract reality. It could mimic such understanding but never possess it. Big difference.

Nobody would have been scared of AI back then.

Then along came Descartes and the Enlightenment. Some fantastic work was done during this time, don’t get me wrong, but in the process we humans were transformed into dead, deterministic automata as well. So it’s no wonder we believe that AI can supersede us, and that we are afraid of it.

The East didn’t undergo such a period. It has a different history, with different philosophies and different perceptions of life and of people in general. I’m no expert on Eastern philosophy (my Master’s in Philosophy was done purely in Western thought), but I would love for somebody to write a book on this topic: how the East perceives AI and machines.

And then perhaps we could learn something from them and give back to mankind the dignity it deserves and possesses. Because we are not just deterministic machines, and the end of civilisation is not looming over us.

Parting Words

First, I am not denying here that AI is going to improve or be disruptive. It’s a given that it will. And if a pause is needed, it is for one reason: to ensure that the disruption isn’t too overwhelming for us. In fairness, last month’s open letter does state something akin to this, i.e. “Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable.” The general vibe of the letter, nonetheless, is one of doom, gloom, and oblivion, and this is what I’ve wanted to address in this article.

Secondly, I realise that I’ve used the East/West divide somewhat loosely, because South America is commonly counted as a Western region. However, I think it’s safe to say that Europe and the US are traditionally much closer culturally to each other than either is to South America. The US has a strong Latino community, but the Europe-US cultural connection is the stronger one. To be more precise, I would have liked to title my article “Europe and the USA Fear Artificial Intelligence, Asia and South America Do Not”, but that’s just a clunky title for a little post on my humble blog.

Finally, I’ll emphasise again that my analysis is not watertight. Perhaps, in fact, I’m clutching at straws here. However, maybe there is something to my suggestion that the “East” perceives AI differently, and that we should be listening to its side of the story in this debate on the future of AI research more than we currently are.
