There have been a number of moments in my career in AI when I have been taken aback by the progress mankind has made in the field. I recall the first time I saw object detection/recognition being performed at near-human levels of accuracy by Convolutional Neural Networks (CNNs). I’m pretty sure it was this picture from Google’s MobileNet (mid-2017) that affected me so much that I needed to catch my breath and then exclaim “No way!” (insert expletive in that phrase, too):

When I first started out in Computer Vision way back in 2004, I was adamant that object recognition at this level of accuracy and speed would simply be impossible for a machine to achieve because of the sheer complexity involved. I was truly convinced of this. There were just too many parameters for a machine to handle! And yet, there I was, being proven wrong. It was an incredible moment of awe, one which I frequently recount to my students when I lecture on AI.

Since then, I’ve learnt to not underestimate the power of science. But I still get caught out from time to time. Well, maybe not caught out (because I really did learn my lesson) but more like taken aback.

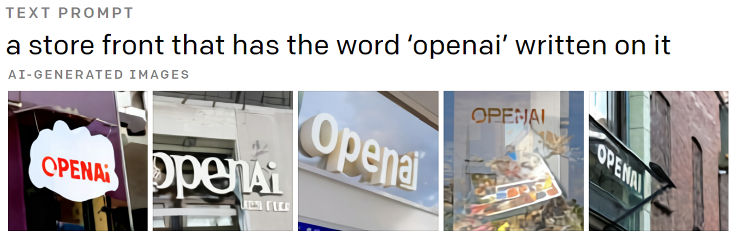

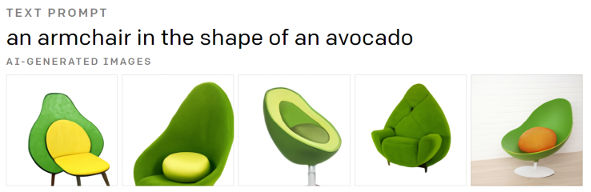

The second memorable moment in my career when I pushed my swivel chair away from my desk and once more exclaimed “No way!” (insert expletive there again) was when I saw text-to-image translation (you provide a text prompt and a machine creates images based on it) being performed by DALL-E in January 2021. For example:

I wrote about DALL-E’s initial capabilities at the end of this post on GPT3. Since then, OpenAI has released DALL-E 2, which is even more awe-inspiring. But that initial moment in January of last year will forever be ingrained in my mind – because a machine creating images from scratch based on text input is something truly remarkable.

This year, we’ve seen text-to-image translation become mainstream. It’s been in the news, John Oliver made a video about it, various open source implementations have been released to the general public (e.g. DeepAI – try it out yourself!), and it has achieved some milestones. For example, Cosmopolitan magazine used a DALL-E 2 generated image as the cover of one of its special issues:

That does look groovy, you have to admit.

My third “No way!” moment (with expletive, of course) occurred only a few weeks ago, when I realised that text-to-video translation (you provide a text prompt and a machine creates a video based on it) may likewise be on its way to becoming mainstream. Four weeks ago (Oct 2022), Google presented ImagenVideo and, a short time later, published another solution called Phenaki. A month before that, Meta announced its own text-to-video application, Make-A-Video (Sep 2022), which was in turn preceded by CogVideo from Tsinghua University (May 2022).

All of these solutions are in their infancy. Apart from Phenaki, the videos generated from an initial text input/instruction are only a few seconds long. None of the generated videos have audio. The results aren’t perfect: distortions (aka artefacts) are clearly visible. And the videos that we have seen have undoubtedly been cherry-picked (CogVideo, however, has been released as open source, so you can try it out yourself). But hey, the videos are not bad either! You have to start somewhere, right?

Let’s take a look at some examples generated by these four models. Remember, this is a machine creating videos purely from text input – nothing else.

CogVideo from Tsinghua University

Text prompt: “A happy dog” (video source)

Here is an entire series of videos created by the model, as presented on the official GitHub site:

As I mentioned earlier, CogVideo is available as open source software, so you can download the model and run it on your own machine if you have an A100 GPU. You can also play around with an online demo here. The one downside of this model is that it only accepts Simplified Chinese as text input, so you’ll need to get Google Translate up and running, too, if you’re not familiar with the language.

Make-A-Video from Meta

Some example videos generated from text input:

Make-A-Video has other amazing features, too: you can provide a still image and get the application to give it motion, you can provide two still images and the application will “fill in” the motion between them, or you can provide a video and request that different variations of it be produced.

Example – left image is input image, right image shows generated motion for it:

It’s hard not to be impressed by this. However, as I mentioned earlier, these results are obviously cherry-picked. We do not have access to any API or code to produce our own creations.

ImagenVideo from Google

Google’s first solution attempts to build on the quality of Meta’s and Tsinghua University’s releases. Firstly, videos are generated at a resolution of 1280×768 at 24 fps (frames per second). Meta’s videos, by default, are created at 256×256 resolution, although Meta mentions that the maximum resolution can be set to 768×768 at 16 fps. CogVideo’s generated videos have similar limitations.

Here are some examples released by Google from ImagenVideo:

Google claims that the videos generated surpass those of other state-of-the-art models. Supposedly, ImagenVideo has a better understanding of the 3D world and can also process much more complex text inputs. If you look at the examples presented by Google on their project’s page, it appears as though their claim is not unfounded.

Phenaki by Google

This is a solution that really blew my mind.

While ImagenVideo focused on quality, Phenaki, which was developed by a different team of Google researchers, focussed on coherency and length. With Phenaki, a user can provide a long list of prompts (rather than just one), which the system then uses to create a film of arbitrary length. The generated clips exhibit similar glitches and jitteriness, but the fact that it can create videos more than two minutes long (albeit at lower resolution) is just astounding. Truly.

Here are some examples:

Phenaki can also generate videos from single images, but these images can additionally be accompanied by text prompts. The following example uses the input image as its first frame and then builds on that by following the text prompt:

For more amazing examples like this (including a few 2+ minute videos), I would encourage you to view the project’s page.

Furthermore, word on the street is that the teams behind ImagenVideo and Phenaki are combining their strengths to produce something even better. Watch this space!

Conclusion

A few months ago I wrote two posts on this blog discussing why I think AI is starting to slow down (part 2 here) and presenting evidence that we’re slowly beginning to hit the ceiling of AI’s possibilities (unless new breakthroughs occur). I still stand by those posts because of the sheer amount of money and time required to train the large neural networks that perform these feats. This is the main reason I was so astonished to see text-to-video models released so soon after we had only just got used to their text-to-image counterparts. I thought we were a long way from this. But science found a way, didn’t it?

So, what’s next in store for us? What will cause another “No way!” moment for me? Text-to-music generation and text-to-video with audio would be nice, wouldn’t they? I’ll research these, see how far we are from them, and present my findings in a future post.

