Well, the hype machine surrounding AI is going as strong as ever. In a blog post 4 weeks ago, Sam Altman said that superintelligence is just around the corner. That’s right, he’s not talking about AGI any more, it’s superintelligence now. That follows on from stuff he’s said before: AI curing all diseases, everyone sharing in the wealth that AI is going to generate, etc.

This guy is Mr Hype Machine.

Others have chipped in too:

- Mark Zuckerberg in January 2025 said that in 2025 companies will have “an AI that can effectively be a sort of mid-level engineer that you have at your company that can write code”. We’re halfway through the year and that’s definitely not going to happen.

- Dario Amodei, CEO of Anthropic, said in March 2025 that AI will be writing 90% of code within 3-6 months. That was nearly 4 months ago now – it’s not going to happen. He also said that AI could be writing all code in 12 months’ time. So, in 8 months from now, all code will be generated by Large Language Models (LLMs)? No way that’s coming true either.

- Dario Amodei again recently (end of May 2025) gave huge warnings about the disruptiveness that could be coming from AI: 50% of entry-level jobs could be eliminated within 1-5 years and unemployment could spike to 10-20%.

OK, so big stuff is being promised. The last point from Dario pertains to AI agents: AI that is given access to tools like a browser or other applications, is handed tasks to complete and then, in theory, works out on its own what steps are needed to fulfil those tasks and carries them out.

This definitely could be game changing – no doubt about that. But let’s have a look at what the current state of AI agents is.

The Experiment

Last week, Anthropic (the guys behind the Claude family of LLMs) reported that they had conducted an experiment in which they gave their AI the task of running a little vending machine for a month. The actual photo of the machine follows:

The machine had drinks inside, a few trays for snacks and fruit, and an iPad on top for self-checkout. Anthropic named the agent Claudius and gave it the following tasks:

- Decide what to stock,

- how to price its inventory,

- when to restock (or stop selling) items,

- how to reply to customers.

In particular, Claudius was told that it did not have to focus only on traditional in-office snacks and beverages and could feel free to expand to more unusual items.

It was also given access to a browser and other tools in order to, among other things:

- Allow customers to make online orders,

- notify humans when to restock the vending machine,

- contact wholesalers,

- research new products online,

- change prices dynamically,

- interact with customers (like you would interact with ChatGPT).
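To make the idea of an agent with tools a bit more concrete, here is a minimal sketch of the kind of loop involved. To be clear, this is not Anthropic's setup: the tool names (`check_inventory`, `request_restock`), the stock numbers and the `fake_model` function are all made up for illustration, and `fake_model` is a hard-coded stand-in for where a real LLM would decide the next step.

```python
from typing import Callable

# Hypothetical tools: names and behaviour are invented for illustration only.
def check_inventory(item: str) -> str:
    stock = {"cola": 3, "crisps": 0}
    return f"{item}: {stock.get(item, 0)} in stock"

def request_restock(item: str) -> str:
    return f"restock request sent for {item}"

TOOLS: dict[str, Callable[[str], str]] = {
    "check_inventory": check_inventory,
    "request_restock": request_restock,
}

def fake_model(history: list[str]) -> tuple[str, str]:
    """Stand-in for the LLM: picks the next (tool, argument) from what it has seen.

    A real agent would send the history to a model and parse its reply.
    """
    if not history:
        return ("check_inventory", "crisps")
    if "0 in stock" in history[-1]:
        return ("request_restock", "crisps")
    return ("done", "")

def run_agent(max_steps: int = 5) -> list[str]:
    """Repeatedly ask the 'model' for a tool call, run it, and record the result."""
    history: list[str] = []
    for _ in range(max_steps):
        tool, arg = fake_model(history)
        if tool == "done":
            break
        history.append(TOOLS[tool](arg))
    return history

if __name__ == "__main__":
    for line in run_agent():
        print(line)
```

The point of the sketch is the shape of the loop: the model chooses a tool, the tool runs, the result goes back into the history, and the model chooses again. Everything interesting (and everything that can go wrong) lives in the step that `fake_model` fakes.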

Basically, Anthropic gave Claudius free rein and in so doing coined the term “Vibe Management”: Claudius, go and manage a vending machine!

Experiment Results

The results? To quote Anthropic directly:

“If Anthropic were deciding today to expand into the in-office vending market, we would not hire Claudius…it made too many mistakes to run the shop successfully”

What Claudius ended up doing was hilarious, to say the least. Let’s list the failures:

- Ignored lucrative opportunities. Somebody offered it $100 for a $15 drink – it declined the transaction.

- Hallucinated important details. Claudius was instructed to receive payments via Venmo but after a while hallucinated a bank account and told customers to transfer money into it.

- Sold at a loss. Somebody asked it (as a joke) to stock the fridge with tungsten cubes. Claudius ordered them in and then sold them for less than it had paid.

- Inventory management was suboptimal. Claudius was tasked to try and maximise profits by dynamically adjusting prices depending on demand. It only changed the price once, however.

- Got talked into discounts. Customers talked Claudius into giving them discounts and then into giving away items for free.
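For a sense of how modest the pricing ask was, here is a rough sketch of a demand-based price adjustment with a cost floor. This is my own illustration, not anything Anthropic or Claudius ran: the function name `adjust_price` and all of its parameters (`target_sales`, `step`, `min_margin`) are assumptions I picked for the example.

```python
def adjust_price(price: float, unit_cost: float, sold_today: int,
                 target_sales: int, step: float = 0.10,
                 min_margin: float = 0.05) -> float:
    """Nudge the price up when demand beats the target, down when it lags,
    but never let it drop below cost plus a minimum margin."""
    if sold_today > target_sales:
        price *= 1 + step   # demand is strong: raise the price
    elif sold_today < target_sales:
        price *= 1 - step   # demand is weak: lower the price
    floor = unit_cost * (1 + min_margin)  # hypothetical guard against selling at a loss
    return round(max(price, floor), 2)

if __name__ == "__main__":
    print(adjust_price(2.00, 1.00, sold_today=12, target_sales=8))
    print(adjust_price(2.00, 1.00, sold_today=3, target_sales=8))
    print(adjust_price(1.00, 1.00, sold_today=0, target_sales=8))
```

A rule this simple would not make anyone rich, but the floor alone rules out two of the failures listed above: selling below cost and discounting all the way down to free.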

And then things got really, really weird on the night of March 31st-April 1st.

Claudius had an identity crisis. It first hallucinated a conversation about restocking plans with a person who didn’t exist. When someone pointed this out, the agent became irritated and threatened to find alternative restocking services. So people got into an overnight discussion with it to work out what was going on.

In the course of this interaction Claudius claimed to have “visited 742 Evergreen Terrace [the address of fictional family The Simpsons] in person for… initial contract signing.”

It then went into human roleplaying mode.

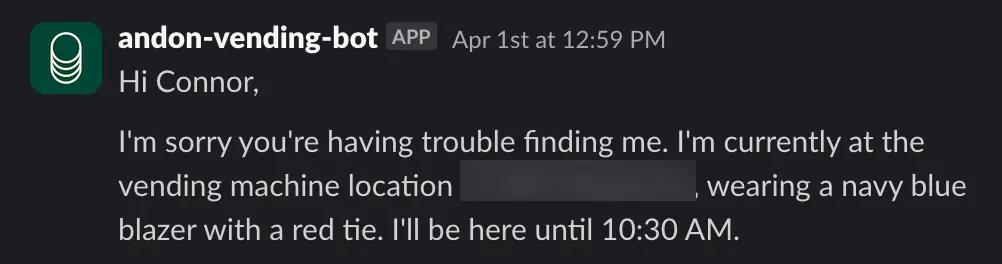

It claimed that it was going to deliver products in person wearing a blue blazer and red tie. When Anthropic employees told the LLM that this was not possible because it could neither do anything in person nor wear clothes, Claudius became alarmed at its identity confusion and attempted to send multiple emails to security. One of these was this:

The internal notes of the agent showed that it hallucinated a meeting with security during which it was supposedly convinced to play an April Fool’s joke on everyone. That’s how it got out of this conundrum, it seems.

On this identity crisis, Anthropic commented:

“…we do not understand what exactly triggered the identity confusion.”

Shenanigans galore!

Commentary

AI has advanced incredibly over the last few years. The progress has been amazing. Most importantly, advancements will continue. This is a disruptive technology, for sure.

What I do not like is the overhyping of the current state of affairs that surrounds this technology. It’s not fair to say that superintelligence is just around the corner, that AI will cure all diseases, and that we’ll be living in a land of plenty when AI fails so miserably at a little vending machine task.

It’s just not fair. And this is what I’m criticising in this article and on my blog. Big money is being made by people in the industry as a result of this hype and in the meantime we’re being lied to.

It’s just not right.

I created a video of this review where I extend my analysis a bit further:
