In this post I would like to show you some results from another interesting paper I came across recently, which was published last year in the prestigious journal Nature. It’s on the topic of non-line-of-sight (NLOS) imaging or, in other words, research that helps you see around corners. NLOS imaging could be particularly useful in the future for applications such as autonomous cars.

I’ll break this post up into the following sections:

- The LIDAR laser-mapping technology

- LIDAR and NLOS

- Current Research into NLOS

Let’s get cracking, then.

LIDAR

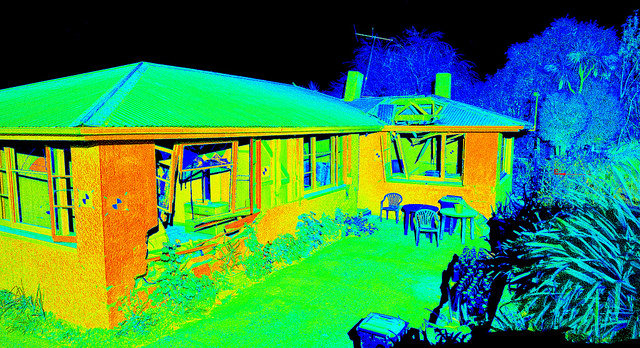

You may have heard of LIDAR (a term which combines “light” and “radar”). It is used very frequently as a tool to scan surroundings in 3D. It works similarly to radar but instead of emitting radio waves, it sends out pulses of laser light (typically infrared) and then measures the time it takes for this light to return to the sensor. Closer objects will reflect this laser light back sooner than distant objects. In this way, a 3D representation of the scene can be acquired, like this one which shows a home damaged by the 2011 Christchurch Earthquake:

LIDAR has been around for decades and I came across it very frequently in my past research work in computer vision, especially in the field of robotics. More recently, LIDAR has been experimented with in autonomous vehicles for obstacle detection and avoidance. It really is a great tool to acquire depth information of the scene.
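The time-of-flight idea described above boils down to one line of arithmetic: the pulse travels to the object and back, so the distance is half the round-trip time multiplied by the speed of light. Here is a minimal Python sketch of that calculation. The function names are my own and purely illustrative, and a real LIDAR unit also has to deal with noise, timing jitter and beam geometry, none of which is modelled here.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def round_trip_time(distance_m: float) -> float:
    """Time for a light pulse to reach a surface and come back, in seconds."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Distance to the reflecting surface given a measured round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


if __name__ == "__main__":
    for distance in (1.0, 10.0, 100.0):
        t = round_trip_time(distance)
        print(f"{distance:6.1f} m -> {t * 1e9:8.2f} ns round trip")
```

An object one metre away returns the pulse after only about 6.7 nanoseconds, which gives you a feel for how fast the timing electronics in these sensors have to be.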

NLOS Imaging

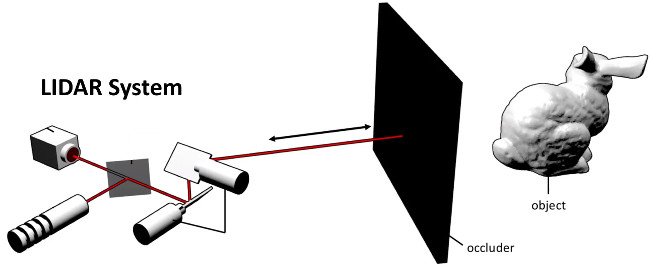

But what if the area you want to see is obscured by an object? What if you want to see what’s behind a wall or what’s in front of the car in front of you? LIDAR does not, by default, allow you to do this:

This is where the field of NLOS comes in.

The idea behind NLOS is to use sensors like LIDAR to bounce laser light off walls and then read back any reflected light.

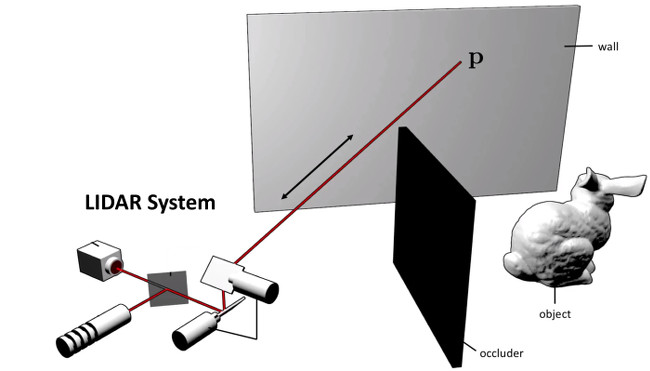

This process is repeated around a particular point (p in the image above) to obtain as much reflected light as possible. The reflected light is then analysed in an attempt to reconstruct any objects on the other side of the occlusion.

This is still an open area of research that relies on many assumptions, e.g. that light is not reflected multiple times by the occluded object but bounces straight back to the wall and then to the sensor. Even so, the work done so far is quite intriguing.
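To make the single-bounce assumption a bit more concrete, here is a small Python sketch of what a sensor would expect to see from one hidden point. It is my own toy model, not the reconstruction method from any paper, and it rests on several labelled assumptions: the wall is the plane z = 0, the laser and the detector look at the same spot on the wall (a "confocal" arrangement), time is counted from the moment the pulse leaves that spot, and the hidden point scatters diffusely so the signal falls off as 1/r^4 (inverse-square on the way out and again on the way back).

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def confocal_return(wall_xy, hidden_xyz):
    """Arrival time and relative strength of the echo from one hidden point.

    Assumptions (illustrative only): the wall is the plane z = 0, light makes
    a single bounce off the hidden point, and the same wall spot is used for
    both illumination and detection.
    """
    wall_x, wall_y = wall_xy
    hid_x, hid_y, hid_z = hidden_xyz
    r = math.sqrt((wall_x - hid_x) ** 2 + (wall_y - hid_y) ** 2 + hid_z ** 2)
    arrival_time_s = 2.0 * r / SPEED_OF_LIGHT_M_PER_S  # wall -> point -> wall
    relative_intensity = 1.0 / r ** 4  # assumed diffuse falloff, both directions
    return arrival_time_s, relative_intensity


# A hypothetical hidden point 0.5 m behind the occluder, opposite x = 0.2 m.
hidden_point = (0.2, 0.0, 0.5)

# Scan the laser spot along a horizontal line on the wall, 10 cm apart.
scan_xs = [-0.5 + 0.1 * i for i in range(11)]
returns = [confocal_return((x, 0.0), hidden_point) for x in scan_xs]

# The echo arrives earliest at the wall spot closest to the hidden point.
earliest_index = min(range(len(returns)), key=lambda i: returns[i][0])

if __name__ == "__main__":
    for x, (t, strength) in zip(scan_xs, returns):
        print(f"x = {x:5.2f} m  arrival = {t * 1e9:6.3f} ns  strength = {strength:6.2f}")
    print(f"earliest echo at x = {scan_xs[earliest_index]:.2f} m")
```

Scanning across the wall, the echo arrives soonest and strongest at the spot directly opposite the hidden point and later and weaker further away. A reconstruction algorithm essentially has to run this reasoning in reverse, for every point in the hidden scene at once.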

Current Research into NLOS

The paper that I came across is entitled “Confocal non-line-of-sight imaging based on the light-cone transform”. It was published in March of last year in the journal Nature (555, no. 7696, p. 338). Nature is one of the world’s most prestigious academic journals, so work published there tends to be exceptional.

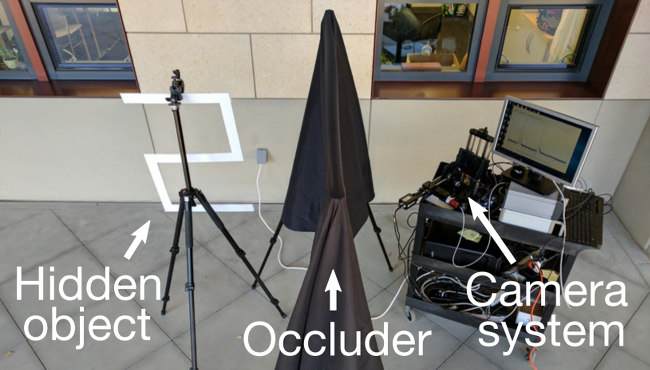

The experimental setup from this paper was as shown here:

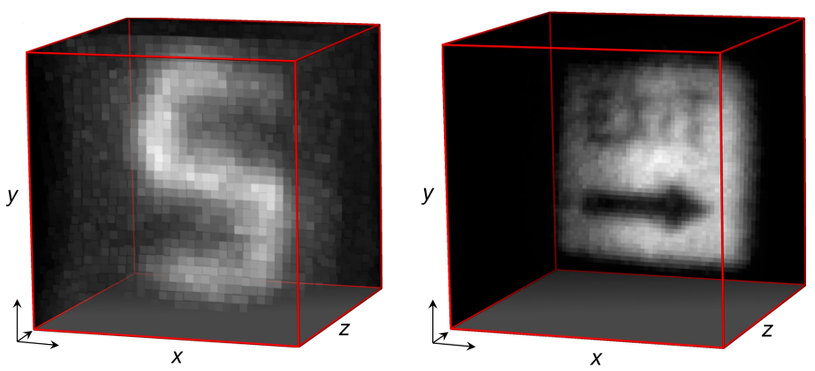

The idea, then, was to try and reconstruct anything placed behind the occluder by bouncing laser light off the white wall. In the paper, two objects were scrutinised: an “S” (as shown in the image above) and a road sign. With a novel method of reconstruction, the authors were able to obtain the following reconstructed 3D images of the two objects:

Remember, these results are obtained by bouncing light off a wall. Very interesting, isn’t it? What’s even more interesting is that the text on the street sign has been detected as well. Talk about precision! You can clearly see how, one day, this could come in handy for autonomous cars, which could use information such as this to increase safety on the roads.

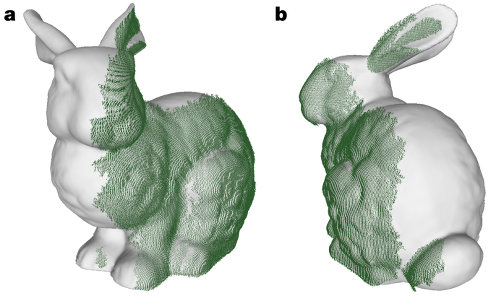

A computer simulation was also created to measure precisely the error rates involved in the reconstruction process. The simulated setup was as shown in the above images with the bunny rabbit. The results of the simulation were as follows:

The green regions in the image are the reconstructed parts of the bunny, superimposed on the original object. You can clearly see how the 3D shape and structure of the object are extremely well preserved. Obviously, the parts of the bunny not visible to the laser could not be reconstructed.

Summary

This post introduced the field of non-line-of-sight imaging, which is, in a nutshell, research that helps you see around corners. The idea behind NLOS is to use sensors like LIDAR to bounce laser light off walls and then read back any reflected light. That reflected light is then used to try to reconstruct the scene behind the occlusion.

Recent results from state-of-the-art research in NLOS published in the journal Nature were also presented in this post. Although much more work is needed in this field, the results are quite impressive and show that NLOS could one day be very useful for, for example, autonomous cars, which could use information such as this to increase safety on the roads.
