I was rewatching “The Bourne Identity” the other day. I love that film so much! Heck, the scene at the end is one of my favourites. Jason Bourne grabs a dead guy, jumps off the top floor landing, and while falling shoots a guy square in the middle of the forehead. He then breaks his fall on the dead body he took down with him and walks away the coolest guy on the block. That has to be one of the best scenes of all time in the action genre.

But there’s one scene in the film that always makes me throw up a little in my mouth. It’s the old “Just enhance it!” scene (minute 31 of the movie), something we see so often in cinemas: people scanning security footage zoom in on a face or a vehicle registration plate, and when the image becomes blurry they ask for the blur to go away. “Enhance it!”, they cry! The IT guy waves his wand and, presto!, we see a full-resolution image on the screen. Stuff like that should be on Penn & Teller: Fool Us – it’s real magic.

But why is enhancing images as shown in movies so ridiculous?

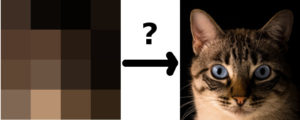

Because you are asking the computer to create new information, i.e. new data for the extra pixels that you are generating. Let’s say you zoom in on a 4×4 region of pixels (as shown below) and want to perform facial recognition on it. You then ask for this region to be enhanced, which means you are asking for more resolution. So, we’re moving from a resolution of 4×4 to, say, 640×480. How on earth is the computer supposed to infer what the additional 307,184 pixels (640 × 480 − 16) should contain? It can guess (which is what recent image-generating applications do), but that’s about it.
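
To put numbers on this, here is a small Python sketch. The 4×4 patch values are invented for illustration, and nearest-neighbour interpolation (simply copying the closest source pixel) stands in for "enhancing". The result has the full 640×480 pixels, yet every one of them is a copy of one of the original 16 values, and sampling it back down returns exactly the patch we started with.

```python
# Sketch: "enhancing" a 4x4 patch to 640x480 with nearest-neighbour upscaling.
# The patch values are made up for illustration.

def upscale_nearest(patch, out_w, out_h):
    """Enlarge a 2-D grid of pixel values by copying the nearest source pixel."""
    in_h, in_w = len(patch), len(patch[0])
    return [
        [patch[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]

# A hypothetical 4x4 greyscale patch (0 = black, 255 = white).
patch = [
    [12, 40, 200, 255],
    [30, 90, 180, 240],
    [60, 120, 150, 210],
    [80, 100, 130, 170],
]

big = upscale_nearest(patch, 640, 480)
new_pixels = 640 * 480 - 4 * 4
distinct_values = {value for row in big for value in row}
# Keep every 120th row and every 160th column to shrink the image back to 4x4.
shrunk = [row[::160] for row in big[::120]]

print(new_pixels)             # 307184 pixels that had to be "made up"
print(len(distinct_values))   # 16: no value that wasn't already in the patch
print(shrunk == patch)        # True: the original 16 values, unchanged
```

Smarter interpolation methods such as bilinear or bicubic blend neighbouring values instead of copying them, which looks smoother, but the output is still computed entirely from those 16 numbers.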

Where is the additional information going to come from?

The other side to the story

However! Something happened at work that made me realise that the common “Enhance” scenario may not be as far-fetched as one would initially think. A client came to us a few weeks ago asking us to perform some detailed video analytics on their security footage. They had terabytes of the stuff – but, as is so often the case, the sample video provided to us wasn’t of the best quality. So, we wrote back to the client explaining the problem and asked them to send us better-quality footage. And they did!

You see, they had initially compressed the footage so that it could be sent over the Internet quickly. And here is where the weak link surfaces: data transfer. If they could have sent the full uncompressed video easily, they would have.

Quality vs transmission constraints

So, back to Hollywood. Let’s say your security footage is recording at some mega resolution. An image of the Andromeda Galaxy released by NASA (taken by its Hubble Space Telescope) has a resolution of 69,536 × 22,230 pixels, or roughly 1.5 billion pixels. That’s astronomical (pun intended)! At that resolution, the image is a whopping 4.3GB in size. The upside is that you can keep zooming in on a planet until you do get a clear picture of an alien’s face.

But let’s assume the CIA, those bad guys chasing Bourne, have similar means at their disposal (I mean, who knows what those people are capable of, right!?). Now, let’s say their cameras have a frame rate of 30 frames/sec, which is relatively poor for the CIA. That means that for each second of video you need 129GB of storage space (30 × 4.3GB). A full day of recording (86,400 seconds) would require over 10 petabytes of space, about 11PB to be precise (I’m abstracting over compression techniques here, of course). And that’s just footage from one camera!
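
The arithmetic is easy to check. The sketch below assumes decimal units (1GB = 10^9 bytes, 1PB = 10^6 GB) and, for the sanity check on the frame size, 3 bytes per pixel (8-bit RGB); neither assumption comes from NASA.

```python
# Back-of-the-envelope storage maths for a camera producing frames the size
# of NASA's Andromeda image, ignoring compression (as the text does).

WIDTH, HEIGHT = 69_536, 22_230      # pixels, from the NASA image
FRAME_GB = 4.3                      # file size quoted for that image
FPS = 30                            # frame rate assumed in the text
SECONDS_PER_DAY = 24 * 60 * 60

pixels_per_frame = WIDTH * HEIGHT
# Assuming 3 bytes per pixel (8-bit RGB); this lands close to the quoted 4.3GB.
raw_gib = pixels_per_frame * 3 / 2**30

gb_per_second = FRAME_GB * FPS
pb_per_day = gb_per_second * SECONDS_PER_DAY / 1_000_000   # 1 PB = 10**6 GB

print(f"{pixels_per_frame:,} pixels per frame")   # 1,545,785,280 pixels per frame
print(f"~{raw_gib:.2f} GiB per raw frame")        # ~4.32 GiB per raw frame
print(f"{gb_per_second:.0f} GB per second")       # 129 GB per second
print(f"{pb_per_day:.1f} PB per day")             # 11.1 PB per day
```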

It’s possible to store video footage of that size – the storage capacities of cloud providers such as Google are through the roof. But the bottleneck is transferring such data. Imagine if half a building were trying to trawl through security footage in its original form from the other side of the globe. It’s just not feasible.
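
To see why, here is a rough estimate of how long one day of that footage (about 11PB) would take to send. The link speeds are assumptions chosen for illustration, and the estimate ignores protocol overhead, so real transfers would be slower still.

```python
# How long would one day of the footage take to move over a network link?
# The 1 Gbit/s and 10 Gbit/s link speeds are assumptions for illustration.

BYTES_PER_DAY = 129e9 * 86_400          # 129 GB/s for 24 hours (~11.1 PB)

def transfer_days(num_bytes, link_bits_per_second):
    """Days needed to send num_bytes at a sustained link speed (no overhead)."""
    seconds = num_bytes * 8 / link_bits_per_second
    return seconds / 86_400

for gbps in (1, 10):
    days = transfer_days(BYTES_PER_DAY, gbps * 1e9)
    print(f"{gbps:>2} Gbit/s: {days:,.0f} days")
```

At a sustained 1 Gbit/s that comes to 1,032 days, or nearly three years, for a single day of footage from a single camera.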

The possible scenario

See where I’m going with this? Here is a possible scenario: initially, security footage is sent across the network in compressed form. People scan this footage, and when they see something interesting, they zoom in and request the higher-resolution version of the zoomed-in region. The IT guy presses a few keys, waits 3 seconds, and the image on the screen is refreshed with NASA-quality resolution.

Boom!
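
Here is a minimal sketch of that workflow in Python. `FootageServer`, `preview()` and `crop()` are hypothetical names, a frame is just a 2-D list of greyscale values, and the preview is made by naive pixel skipping rather than a real video codec. The point is the traffic: the analyst receives a cheap low-resolution copy first, and full-resolution pixels only for the region they ask about.

```python
# Sketch of the "preview first, full detail on request" idea.
# FootageServer, preview() and crop() are hypothetical names; frames are
# 2-D lists of greyscale values standing in for real video frames.

class FootageServer:
    def __init__(self, frame):
        self.frame = frame  # full-resolution frame, kept on the server side

    def preview(self, factor):
        """Cheap low-res copy: keep every `factor`-th pixel in each direction."""
        return [row[::factor] for row in self.frame[::factor]]

    def crop(self, x, y, w, h):
        """Full-resolution pixels for just the requested region."""
        return [row[x:x + w] for row in self.frame[y:y + h]]


def pixel_count(image):
    return sum(len(row) for row in image)


# A made-up 1920x1080 frame filled with a simple gradient pattern.
frame = [[(x + y) % 256 for x in range(1920)] for y in range(1080)]
server = FootageServer(frame)

low_res = server.preview(factor=8)             # what the analysts scan first
face = server.crop(x=960, y=400, w=64, h=64)   # "Enhance!" on a region of interest

print(pixel_count(frame))    # 2073600 pixels in the full frame
print(pixel_count(low_res))  # 32400 pixels sent for the 240x135 preview
print(pixel_count(face))     # 4096 pixels sent for the full-detail crop
```

For a 1920×1080 frame, the preview plus the 64×64 crop comes to under 2% of the pixels in the full frame.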

Of course, additional infrastructure would be necessary to deal with various video resolutions, but that is no biggie. In fact, we see this idea being utilised in a product all of us use on a daily basis: Google Maps. Initially, low-resolution images are transferred to your device to save on bandwidth. Each time you zoom in, the image is blurry until the extra pixels have been downloaded.
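
The tiling scheme behind web maps makes the same trade-off explicit. The sketch below uses the widely documented "slippy map" XYZ scheme from OpenStreetMap, in which each zoom level splits every tile into four. Google Maps uses a similar tile pyramid, but treat the details here as an assumption rather than a description of Google's implementation. Because the world holds 4^zoom tiles at a given zoom level, a client can only afford to fetch the handful that cover the screen.

```python
import math

# Web-map tile maths in the common "slippy map" XYZ scheme (as documented by
# OpenStreetMap). Whether Google uses exactly this is an assumption.

def lonlat_to_tile(lon, lat, zoom):
    """Return the (x, y) index of the tile containing a point at a zoom level."""
    n = 2 ** zoom                                   # tiles per side at this zoom
    x = int((lon + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    return x, y

def tiles_at_zoom(zoom):
    """Each zoom level splits every tile into four, so there are 4**zoom tiles."""
    return 4 ** zoom

# Roughly central London: the client only needs the tile(s) around this point.
for z in (0, 1, 10, 18):
    print(z, tiles_at_zoom(z), lonlat_to_tile(-0.1276, 51.5072, z))
```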

So, is that what’s been happening all these years in our films? No way. Hollywood isn’t that smart. The CIA might be, though. (If not, and they’re reading this: Yes, I will consider being hired by you – get your people to contact my people).

Summary

The old “enhance image” scene from movies may be annoying as hell, but it is not as far-fetched as it first seems. Compressed versions of videos could be sent initially to save on bandwidth. Then, when more resolution is needed, a request can be sent for better-quality images.

(Note: If this post is found on a site other than zbigatron.com, a bot has stolen it – it’s been happening a lot lately)
