Facial recognition. What a hot topic this is today. A week hardly goes by without it making the news in one way or another. This technology seems to be infiltrating more and more of our everyday lives: from ID verification on our phones (to unlock them) to automated border processing systems at airports. In some ways this is a good thing, but in others it is not. The most controversial aspect of the growing ubiquity of facial recognition technology (FRT) is arguably the erosion of privacy. The more FRT is used in our lives, the more it seems that we are turning into a highly monitored society.

This erosion of privacy is such a pressing issue to some that three cities in the USA have banned the use of FRT: San Francisco and Oakland in California and Somerville in Massachusetts. These bans, however, only affect city agencies such as police departments. Portland, Oregon, on the other hand, may soon be introducing a bill that could also cover private retailers and airlines. Moreover, according to Mutale Nkonde, a Harvard fellow and AI policy advisor, a federal ban could be around the corner.

FRT is undoubtedly controversial with respect to the debate on privacy.

In this post I would like to introduce a paper from the 2019 International Conference on Computer Vision (ICCV) that attempts to provide a little additional privacy in our lives by proposing a fast and impressive method to de-identify videos in real time. The de-identification is meant to be effective against machines rather than humans, so we are still able to perceive the original identity of the speaker in the resulting video.

(TL;DR: jump to the end to see the results generated by the researchers. They’re quite impressive.)

The paper in question here is entitled “Live Face De-Identification in Videos” by Gafni et al. published by the Facebook Research Group (isn’t it ironic that Facebook is writing academic papers on privacy?).

The de-identification algorithm itself is a bit tricky to explain but I’ll do my best. First, an adversarial autoencoder network is paired with a trained facial classifier. An autoencoder network (which is a special case of the encoder-decoder architecture) works by imposing a bottleneck in the neural network, which forces the network to learn a compressed representation of the original input image. (Here’s a fantastic video explaining what this means exactly). So, what happens is that a compressed version of your face is generated – but the important aspects such as your pose, lip positioning, expression, illumination conditions, any occlusions, etc. are all retained. What is discarded are the identifying elements. This retaining/discarding is controlled by having a trained facial classifier nearby that the autoencoder tries to fool. During training, the autoencoder gets better and better at fooling the facial classifier by learning to more effectively discard identifying elements from faces, while retaining the important aspects of them.
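To make that tug-of-war a little more concrete, here is a toy sketch in Python. It is my own illustration and not the loss function from the paper: the function names, the `identity_weight` parameter and the use of plain lists for pixels and identity embeddings are all assumptions made for the example. The idea it shows is simply that the output is rewarded for staying close to the input (pose, expression, lighting) and penalised for still looking like the same person to the classifier.

```python
import math


def mean_squared_error(a, b):
    # Stand-in for "how well are pose, expression, lighting, etc. retained".
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def cosine_similarity(a, b):
    # Stand-in for "how similar does the classifier think the identities are".
    # Assumes neither vector is all zeros.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def deid_loss(original_pixels, output_pixels,
              original_identity, output_identity,
              identity_weight=1.0):
    # Hypothetical combined objective: lower is better for the autoencoder.
    # Term 1 keeps the output visually close to the input.
    reconstruction = mean_squared_error(original_pixels, output_pixels)
    # Term 2 grows when the classifier still sees the same identity.
    identity = cosine_similarity(original_identity, output_identity)
    return reconstruction + identity_weight * identity


# Same picture, same identity embedding: the identity term is at its maximum.
unchanged = deid_loss([0.0, 1.0], [0.0, 1.0], [1.0, 0.0], [1.0, 0.0])

# Same picture, but the classifier now sees an unrelated identity.
fooled = deid_loss([0.0, 1.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0])
```

In this toy setup `fooled` comes out lower than `unchanged`, which is the direction training would push the autoencoder in. A real system would compute both terms with neural networks and would need extra care to keep the output looking natural.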

What results is a face that is still easily recognisable to us, an algorithm that works in real time (meaning that you can “turn it on” for Skype sessions, for example) and one that doesn’t need to be retrained for each particular face: it just works out of the box for all faces.

Here is a video released by the authors of the paper showing some of their results:

Very impressive, if you ask me! Remember, the generated faces in the video have been de-identified, meaning that a facial recognition algorithm (FaceNet or ArcFace, for example) will find them extremely difficult to identify. In fact, experiments were performed to see how well the researchers’ algorithm performs against popular FRTs. In one experiment, FaceNet was tested on images before and after de-identification. The true positive rate for one dataset dropped from almost 0.99 to less than 0.04. Very nice, indeed.
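If you are wondering what a true positive rate means here, the sketch below shows one common way to compute it. This is an assumption on my part about the general setup, not the paper's evaluation code: a recogniser turns each face into an embedding vector, and two faces count as a match when the distance between their embeddings falls under a threshold. The embeddings and the threshold of 0.5 are made up for illustration and have nothing to do with FaceNet's real outputs.

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def true_positive_rate(same_person_pairs, threshold):
    # Each pair holds two embeddings that truly belong to the same person.
    # A pair is a true positive when the recogniser also calls it a match.
    matches = sum(1 for a, b in same_person_pairs
                  if euclidean(a, b) <= threshold)
    return matches / len(same_person_pairs)


# Made-up embeddings: (reference photo, video frame) for two people.
pairs_before = [([0.0, 0.0], [0.1, 0.0]),
                ([1.0, 1.0], [1.0, 1.2])]

# After de-identification the first person's frame has drifted far away.
pairs_after = [([0.0, 0.0], [2.0, 0.0]),
               ([1.0, 1.0], [1.0, 1.2])]

tpr_before = true_positive_rate(pairs_before, threshold=0.5)
tpr_after = true_positive_rate(pairs_after, threshold=0.5)
```

The further the de-identified embeddings move from the originals, the fewer genuine pairs fall under the threshold, and the lower the true positive rate gets.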

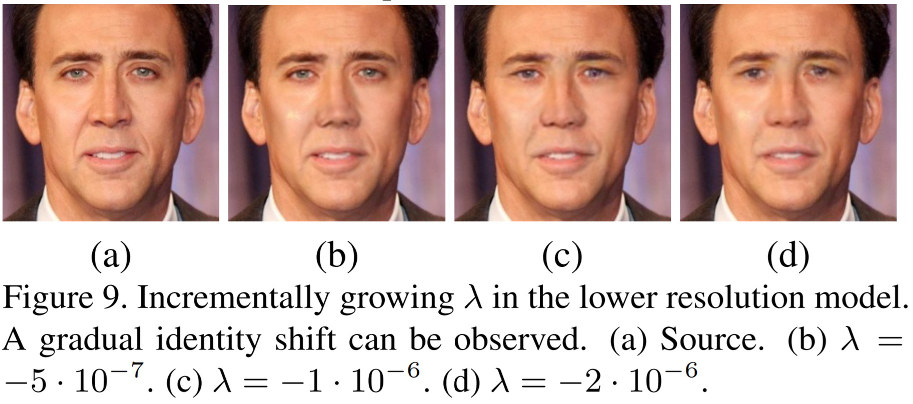

Moreover, the paper also goes into detail on a number of key steps of the algorithm. One of them is the de-identification distance from the original image, that is, how strongly a person’s face is de-identified. The image below shows Nicolas Cage being gradually anonymised by increasing a variable in the algorithm. This is also quite interesting.

Summary

In this post I presented a paper from the Facebook Research Group on the de-identification of faces in real time. In the context of FRTs and the current hot debate on privacy, this is an important piece of work, especially considering that the algorithm gets impressive results and works in real time. Whether we will see this technology in use in the near future is hard to say, but I wouldn’t be surprised if a de-identification app that works much like the face swap filter on Instagram becomes available to the general public. There is certainly a demand for it.
