Computer vision is a fascinating area in which to work and perform research. And, as I’ve mentioned a few times already, it’s been a pleasure to witness its phenomenal growth, especially in the last few years. However, as with pretty much anything in the world, contention also plays a part in its existence.

In this post I would like to present two recent events from the world of computer vision that have caused controversy:

- A judge’s ruling that Facebook must stand trial for its facial recognition software

- The death of a pedestrian struck by one of Uber’s autonomous cars

Facebook and Facial Recognition

This event has seemingly passed under the radar (at least for me it did), probably because the Facebook-Cambridge Analytica scandal has recently been flooding the news and social discussions. But I think it is also an important event to mull over because it touches upon an important topic: facial recognition and privacy.

So, what has happened?

In 2015, Facebook was hit with a class action lawsuit (the original can be found here) by three residents of Chicago, Illinois. They accuse Facebook of violating the state’s biometric privacy laws by collecting and storing biometric data of each user’s face. This data is being stored without written notification. Moreover, it is not clear exactly what the data is to be used for or how long it will reside in storage, and no opt-out option was ever provided.

Facebook began to collect this data, as the lawsuit states, in a “purported attempt to make the process of tagging friends easier”.

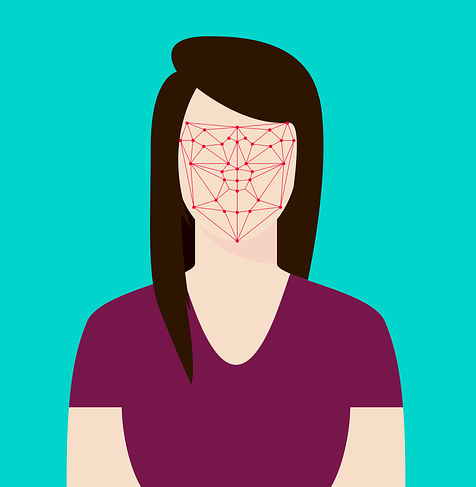

In other words, what Facebook is doing (yes, even now) is summarising the geometry of your face with certain parameters (e.g. distance between eyes, shape of chin, etc.). This data is then used to try to locate your face elsewhere to provide tag suggestions. But for this to be possible, the biometric data needs to be stored somewhere for it to be recalled when needed.
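To make the idea concrete, here is a minimal sketch of matching a face against stored measurements. It is only an illustration of the general principle, not Facebook’s actual method: the feature choice, the stored values, the `suggest_tag` helper and the threshold are all hypothetical.

```python
import math

# Hypothetical stored templates: user -> (eye distance, chin width, nose length),
# each normalised by face width. The values are made up for illustration.
stored_templates = {
    "alice": (0.42, 0.61, 0.33),
    "bob": (0.47, 0.55, 0.38),
}

def distance(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def suggest_tag(face, templates, threshold=0.05):
    """Return the closest stored user, or None if nobody is close enough."""
    best_user, best_dist = None, float("inf")
    for user, template in templates.items():
        d = distance(face, template)
        if d < best_dist:
            best_user, best_dist = user, d
    return best_user if best_dist <= threshold else None

# A face measured in a newly uploaded photo (again, made-up numbers).
new_face = (0.43, 0.60, 0.33)
print(suggest_tag(new_face, stored_templates))
```

Note that the lookup only works because `stored_templates` exists: without the stored measurements there is nothing to compare a new face against, which is exactly the storage the lawsuit is concerned with.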

The Illinois residents are not happy that a firm is doing this without their knowledge or consent. Considering the Cambridge Analytica scandal, they kind of have a point, you would think? Who knows where this data could end up. They are suing for $75,000 and have requested a jury trial.

Anyway, Facebook contested the lawsuit and asked that it be thrown out of court, stating that the law in question does not cover its tagging suggestion feature. A year ago, a District Judge rejected Facebook’s appeal.

Facebook appealed again, stating that proof of actual injury needs to be shown. Wow! As if violating privacy isn’t injurious enough!?

But on May 14th, the same judge rejected Facebook’s appeal (official ruling here):

[It’s up to a jury] to resolve the genuine factual disputes surrounding facial scanning and the recognition technology.

So, it looks like Facebook will be facing the jury on July 9th this year! Huge news, in my opinion, even if any verdict will only pertain to the United States. There is still so much that needs to be done to protect our data but at least things seem to be finally moving in the right direction.

Uber’s Autonomous Car and the Death of a Pedestrian

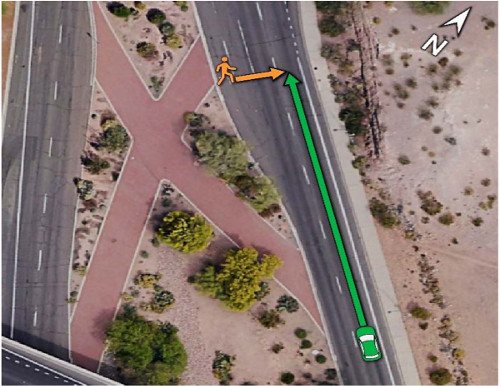

You probably heard on the news that on March 18th this year a woman was hit by an autonomous car owned by Uber in Arizona as she was crossing the road. She died in hospital shortly after the collision. This is believed to be the first ever fatality of a pedestrian in which an autonomous car was involved. There have been other deaths in the past (3 in total) but all of them have been of the driver.

3 weeks ago the US National Transportation Safety Board (NTSB) released its first report (short read) into this crash. It was only a preliminary report but it provides enough information to state that the self-driving system was at least partially at fault.

The report gives the timeline of events: the pedestrian was detected about 6 seconds before impact but the system had trouble identifying her. It first classified her as an unknown object, then as a vehicle, then as a bicycle, but even then it couldn’t work out her direction of travel. At 1.3 seconds before impact, the system determined that an emergency braking maneuver was needed, but this maneuver had been disabled earlier to prevent erratic vehicle behaviour on the roads. Moreover, the system was not designed to alert the driver in such situations. The driver began braking less than 1 second before impact but it was tragically too late.

The bottom line is that if the self-driving system had immediately recognised the object as a pedestrian walking directly into its path, it would have known that avoidance measures were needed well before the emergency braking maneuver was called for. This is a deficiency of the artificial intelligence implemented in the car’s system.
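A rough back-of-the-envelope calculation shows why those few seconds matter. The sketch below compares the gap between car and pedestrian at the two moments in the timeline (6 seconds and 1.3 seconds before impact) with the distance needed to brake to a halt. The speed and deceleration are my own illustrative assumptions, not figures taken from the text above, and system and human reaction delays are ignored.

```python
# All numbers below are illustrative assumptions, not values from the report.
SPEED_M_PER_S = 19.0          # assumed constant vehicle speed
DECELERATION_M_PER_S2 = 7.0   # assumed hard-braking deceleration on a dry road

def distance_to_impact(seconds_before_impact, speed=SPEED_M_PER_S):
    """Gap still separating car and pedestrian, assuming constant speed."""
    return speed * seconds_before_impact

def stopping_distance(speed=SPEED_M_PER_S, deceleration=DECELERATION_M_PER_S2):
    """Distance needed to brake to a standstill: v^2 / (2a)."""
    return speed ** 2 / (2 * deceleration)

def can_stop_in_time(seconds_before_impact):
    """True if braking starts early enough to stop before the impact point."""
    return distance_to_impact(seconds_before_impact) >= stopping_distance()

for t in (6.0, 1.3):
    print(f"{t} s before impact: gap {distance_to_impact(t):.1f} m, "
          f"needs {stopping_distance():.1f} m, "
          f"stops in time: {can_stop_in_time(t)}")
```

Under these assumed numbers, braking at 6 seconds leaves plenty of room, while braking at 1.3 seconds does not. Different assumptions would shift the margin, but not the basic point that early, correct classification buys the distance needed to stop.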

No statement has been made with respect to who is legally at fault. I’m no expert but it seems like Uber will be given the all-clear: the pedestrian had hard drugs detected in her blood and was crossing outside a designated crossing area.

Nonetheless, this is a significant event for AI and computer vision (which plays a pivotal role in self-driving cars) because, had these performed better, the crash would have been avoided (as researchers have shown).

Big ethical questions are being taken seriously. For example, who will be held accountable if a fatal crash is deemed to be the fault of the autonomous car? The car manufacturer? The people behind the algorithms? One sole programmer who messed up a for-loop? Stanford scholars have been openly discussing the ethics behind autonomous cars for a long time (it’s an interesting read, if you have the time).

And what will be the future for autonomous cars in the aftermath of this event? Will their inevitable delivery into everyday use be pushed back?

Testing of autonomous cars has been halted by Uber in North America. Toyota has followed suit. And Chris Jones, who leads the Autonomous Vehicle Analysis service at the technology analyst company Canalys, says that these events will set the industry back considerably:

It has put the industry back. It’s one step forward, two steps back when something like this happens… and it seriously undermines trust in the technology.

Furthermore, a former US Secretary of Transportation has deemed the crash a “wake up call to the entire [autonomous vehicle] industry and government to put a high priority on safety.”

But other news reports seem to indicate a different story.

Volvo, the make of car that Uber was using in the fatal crash, stated only last week that they expect a third of their cars sold to be autonomous by 2025. Other car manufacturers are making similar announcements. Two weeks ago General Motors and Fiat Chrysler unveiled self-driving deals with companies like Google to push for a lead in the self-driving car market.

And Baidu (China’s Google, so to speak) is heavily invested in the game, too. Even Chris Jones is admitting that for them this is a race:

The Chinese companies involved in this are treating it as a race. And that’s worrying. Because a company like Baidu – the Google of China – has a very aggressive plan and will try to do things as fast as it can.

And when you have a race among large corporations, there isn’t much that is going to even slightly postpone anything. That’s been my experience in the industry anyway.

Summary

In this post I looked at two recent events from the world of computer vision that have caused controversy.

The first was a judge’s ruling in the United States that Facebook must stand trial for its facial recognition software. Facebook is being accused of violating Illinois’ biometric privacy laws by collecting and storing biometric data of each user’s face. This data is being stored without written notification. Moreover, it is not clear exactly what the data is being used for or how long it is going to reside in storage, and no opt-out option was ever provided.

The second event was the first recorded death of a pedestrian caused by an autonomous car, in March of this year. A preliminary report released by the US National Transportation Safety Board 3 weeks ago provides enough information to state that the self-driving system was at least partially at fault for the crash. Debate over the ethical issues inherent to autonomous cars has heated up as a result but it seems as though the incident has not held up the race to bring self-driving cars onto our streets.
