SIGGRAPH 2019 is coming to an end today. SIGGRAPH, which stands for “Special Interest Group on Computer GRAPHics and Interactive Techniques”, is a world-renowned annual conference held predominantly for computer graphics researchers – but papers from the world of computer vision do sometimes get published there too. In fact, I’ve presented a few such papers on this blog in the past (e.g. see here).

I’m not going to present any papers from this conference today. Instead, I would like to mention a person who is being recognised at this year’s conference with a special award. A few days ago, Michael F. Cohen, currently Director of Facebook’s Computational Photography Research team, received the 2019 Steven A. Coons Award for Outstanding Creative Contributions to Computer Graphics. The award is given every two years to honour outstanding lifetime contributions to computer graphics and interactive techniques.

For the full, very impressive list of Michael’s achievements, see the SIGGRAPH award’s page. A few of them stand out, however: in particular, his significant contributions to Facebook’s 3D photos feature and, most interestingly (for me), his work on The Moment Camera.

You may recall that in March of this year I wrote about Smartphone Camera Technology from Google and Nokia. At the time, I didn’t realise that the foundations for the technologies I discussed there were laid down by Michael nearly 15 years ago.

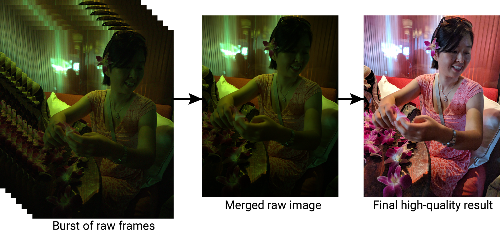

In that post I talked about High Dynamic Range (HDR) imaging, a technique some cameras use to produce better-quality photos. The basic idea behind HDR is to capture several shots of the same scene (at different exposure levels, for instance) and then combine the best parts of each into a single picture. For example, the image on the right below was created by a Google phone using a single camera and HDR technology: a quick succession of 10 photos (called an image burst) was taken of a dimly lit indoor scene and then merged. The final picture gives a vivid representation of the scene. Quite astonishing, really.

(image taken from here)
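To make the idea of “taking what’s best out of each photo” a little more concrete, here is a minimal Python/NumPy sketch of a simplified exposure fusion. It is only an illustration under stated assumptions, not what Google’s phones or Michael’s paper actually do: the frames are assumed to be already aligned, greyscale and scaled to [0, 1], and each pixel is weighted purely by how close it is to mid-grey (a simplified form of the “well-exposedness” weight used in the exposure fusion technique of Mertens et al.). The function name `fuse_exposures` and its `sigma` parameter are hypothetical.

```python
import numpy as np

def fuse_exposures(frames, sigma=0.2):
    """Hypothetical helper: merge aligned greyscale frames (values in [0, 1]).

    Each pixel in each frame is weighted by how close it is to mid-grey (0.5),
    so well-exposed pixels dominate and nearly black or clipped pixels
    contribute little. Assumes the frames are already aligned.
    """
    # Stack the frames into one array of shape (n_frames, height, width)
    stack = np.stack([np.asarray(f, dtype=np.float64) for f in frames])
    # Gaussian "well-exposedness" weight centred on mid-grey
    weights = np.exp(-((stack - 0.5) ** 2) / (2 * sigma ** 2))
    # Normalise so that, for every pixel, the weights across frames sum to 1
    weights /= weights.sum(axis=0, keepdims=True) + 1e-12
    # Per-pixel weighted average of the frames
    return (weights * stack).sum(axis=0)

# Usage: a tiny 2x2 "scene" shot at a dark and a bright exposure
dark = np.array([[0.05, 0.10], [0.45, 0.02]])
bright = np.array([[0.50, 0.60], [1.00, 0.40]])
fused = fuse_exposures([dark, bright])
# fused[1, 0] is about 0.47: the well-exposed 0.45 from `dark`
# dominates the clipped 1.0 from `bright`
```

A real pipeline would also align the frames, handle colour and blend across several image scales to avoid visible seams. For a burst of identically exposed frames, merging is closer to plain averaging, which mainly reduces random noise rather than extending dynamic range.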

Well, Michael F. Cohen laid out the basic ideas behind HDR, i.e. combining several images to create better pictures, at the beginning of this century. For example, he and Richard Szeliski published this fantastic paper in 2006, in which they talk about the idea of capturing a moment rather than an image. “Capturing a moment” is a much better description of what HDR is all about!

The abstract to the paper says it best:

> Future cameras will let us “capture the moment,” not just the instant when the shutter opens. The moment camera will gather significantly more data than is needed for a single image. This data, coupled with automated and user-assisted algorithms, will provide powerful new paradigms for image making.

Ah, the moment camera. What a good name for HDR-capable phones!

It’s interesting to note how long it has taken for the moment camera to become available to the general public. I would guess that we simply had to wait for faster CPUs on our phones before Michael’s work could become a reality. However, some features of the “moment camera” described in the 2006 paper have yet to be implemented in our HDR-enabled phones, for example the idea of improving a group shot by means of image segmentation.

Anyway, a well-deserved lifetime achievement award, Michael. And thank you for the “moment camera”.
