Data mining is big business. Everyone is analysing mouse clicks, mouse movements and customer purchase patterns. Such analysis has proven to give profitable insights that are driving businesses further than ever before.

But not many people have considered data mining video. What about all that security footage that has stacked up over the years? Can we mine it for profitable insights as well? Of course!

In this blog post I’m going to present a task that video analytics can do for you: the plotting of tracked customers or staff from video footage onto a 2D floor plan.

Why would you want to do this? Well, plotting on a 2D plane will allow you to more easily data mine for things such as common movement patterns or common places of congestion at particular times of the day. This is powerful information to possess. For example, if you can deduce what products customers reached for in what order you can make important decisions with respect to the layout of your shelves and placement of advertising.

Another benefit of this technique is that movement patterns are much easier to visualise on a 2D plane than on distorted CCTV footage. (In fact, in my next post I extend what I present here by showing you how to generate heatmaps from your tracking data – check it out).

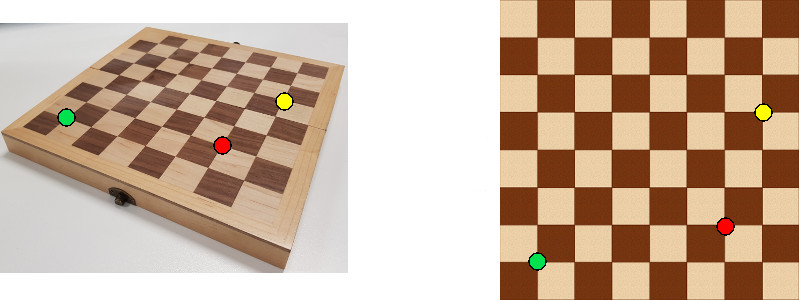

However, if you have ever tried to undertake this task you may have found that it is not as straightforward as you initially thought. A major difficulty is that your security camera images are distorted by perspective. For example, a one-pixel movement at the top of your image corresponds to a much larger movement in the real world than a one-pixel movement at the bottom of your image.

Where to begin? In tackling this problem, the first thing to realise is that we are dealing with two planes in Euclidean space. One plane (the floor in your camera footage) is “stretched out”, while the other is “laid flat”. We, therefore, need a transformation function to map points from one plane to the other.

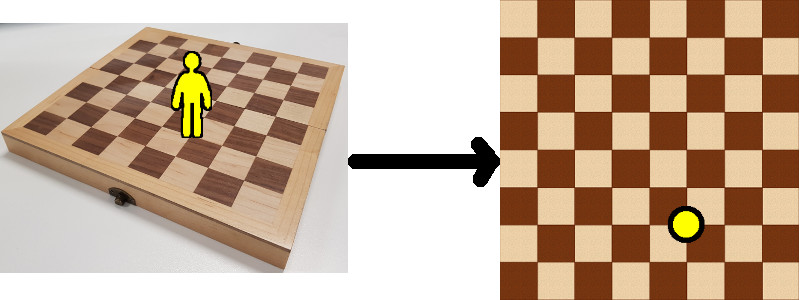

The following image shows what we are trying to achieve (assume the chessboard is the floor in your shop/business):

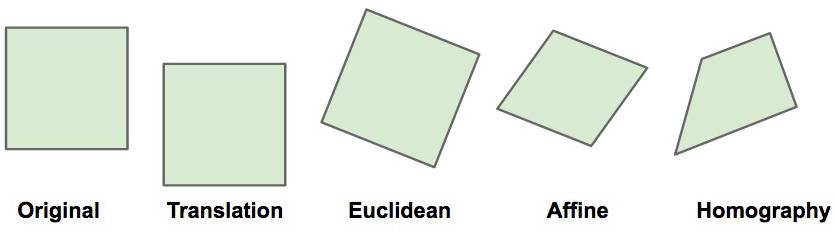

The next step, then, is to deduce what kind of transformation is necessary. Once we know this, we can start to look at the mathematics behind it and use this maths accordingly in our application. Here are some possible transformations:

Translations (the first of the transformations pictured) are shifts along the x and y axes that preserve orientation. Euclidean transformations add rotation, so they change the orientation of the plane but preserve the distances between points – definitely not our case, as mentioned earlier. Affine transformations are a combination of translation, rotation, scale, and shear. They can change the distances between points, but parallel lines remain parallel after the transformation – also not our case, because parallel lines on the floor converge in the camera image. Lastly, we have homographic (projective) transformations, which can change a square into a general quadrilateral while keeping straight lines straight. This is what we are after.
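To make the differences concrete, here is a small NumPy sketch. It assumes the common convention of writing each transformation as a 3×3 matrix that acts on points extended with a third coordinate of 1. The matrices, the test square and the helper names (`apply`, `side_lengths`, `opposite_sides_parallel`) are all made up for illustration.

```python
import numpy as np

def apply(M, pts):
    """Apply a 3x3 transformation M to an (N, 2) array of points."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])  # (x, y) -> (x, y, 1)
    out = homog @ M.T
    return out[:, :2] / out[:, 2:3]  # divide by the third coordinate

def side_lengths(q):
    """Lengths of the four sides of a quadrilateral given as a (4, 2) array."""
    return np.linalg.norm(np.roll(q, -1, axis=0) - q, axis=1)

def opposite_sides_parallel(q):
    """True if both pairs of opposite sides are still parallel."""
    e = np.roll(q, -1, axis=0) - q  # the four edge vectors
    cross = lambda u, v: u[0] * v[1] - u[1] * v[0]
    return bool(np.isclose(cross(e[0], e[2]), 0) and np.isclose(cross(e[1], e[3]), 0))

theta = np.deg2rad(30)  # arbitrary rotation angle for the example
translation = np.array([[1, 0, 5], [0, 1, -2], [0, 0, 1]], dtype=float)
euclidean = np.array([[np.cos(theta), -np.sin(theta), 5],
                      [np.sin(theta), np.cos(theta), -2],
                      [0, 0, 1]])
affine = np.array([[2.0, 0.5, 5], [0.0, 1.5, -2], [0, 0, 1]])
# only the homography has a non-trivial bottom row
homography = np.array([[1.0, 0.2, 5], [0.1, 1.0, -2], [0.001, 0.002, 1]])

square = np.array([[0, 0], [100, 0], [100, 100], [0, 100]], dtype=float)

for name, M in [("translation", translation), ("euclidean", euclidean),
                ("affine", affine), ("homography", homography)]:
    q = apply(M, square)
    print(name,
          "| side lengths kept:", bool(np.allclose(side_lengths(q), 100)),
          "| parallel sides kept:", opposite_sides_parallel(q))
```

With these example matrices, only the homography breaks the parallel sides of the square, which is exactly the kind of distortion a camera introduces.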

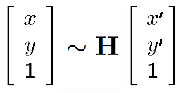

Mathematically, homographic transformations are represented as such:

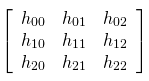

where (x, y) represent pixel coordinates in one plane, (x’, y’) represent pixel coordinates in the other plane, and H is the homography matrix, a 3×3 matrix.

Basically, the equation states this: given a point (x’, y’) in one plane, multiplying it by the homography matrix H gives the corresponding point (x, y) in the other plane. So, if we calculate H, we can map any pixel in our camera image to its position on the flat image.
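Written out in full, and assuming the usual convention of homogeneous coordinates (each pixel gets a third coordinate fixed at 1), the relationship looks roughly like this. The entry names $h_{ij}$ and the scale factor $s$ are my own labels for this sketch:

$$
s \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}
= H \begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix},
\qquad
H = \begin{bmatrix}
h_{11} & h_{12} & h_{13} \\
h_{21} & h_{22} & h_{23} \\
h_{31} & h_{32} & h_{33}
\end{bmatrix}
$$

Dividing out the scale factor gives the mapped pixel directly:

$$
x = \frac{h_{11} x' + h_{12} y' + h_{13}}{h_{31} x' + h_{32} y' + h_{33}},
\qquad
y = \frac{h_{21} x' + h_{22} y' + h_{23}}{h_{31} x' + h_{32} y' + h_{33}}
$$

The division by the bottom row is what allows a homography to squeeze distant parts of the floor together, something a plain affine transformation cannot do.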

But how do you calculate this magic matrix H? To gloss over some intricate mathematics: H has nine entries but is only defined up to scale, which leaves eight unknowns, and every point pair (a point in one image and its corresponding point in the other) gives us two equations. So we need at least 4 point pairs, with no three points on the same line, to get a minimal solution of H. But because the points we pick are never perfectly accurate, the more point pairs we provide, the better the estimate of H will be.

Getting the corresponding point pairs from our images is easy, too. You can use an image editing application like GIMP. If you move your mouse over an image, the pixel coordinates of your mouse position are shown at the bottom of the window. Jot down the pixel coordinates from one image and the corresponding pixel coordinates in the matching image. Get at least four such point pairs and you can then get an estimate of H and use it to calculate any other corresponding point pairs.
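If you are curious about the glossed-over maths, here is a minimal NumPy sketch of one standard way to solve for H, usually called the direct linear transform. It is not necessarily what any particular library does internally, and the function names `homography_from_points` and `project`, the matrix `H_true` and the test square are my own inventions for this example. It uses the convention that the destination point equals H times the source point, up to scale.

```python
import numpy as np

def homography_from_points(src, dst):
    """Estimate H with dst ~ H @ src from N >= 4 point pairs."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # each point pair contributes two linear equations in the 9 entries of H
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    A = np.array(rows)
    # the best solution is the right singular vector with the smallest singular value
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    # remove the arbitrary scale (assumes the bottom-right entry is not zero)
    return H / H[2, 2]

def project(H, pts):
    """Map an (N, 2) array of points through H."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    out = homog @ H.T
    return out[:, :2] / out[:, 2:3]

# a made-up "true" homography and four corners of a square
H_true = np.array([[1.0, 0.2, 5.0], [0.1, 1.0, -2.0], [0.001, 0.002, 1.0]])
src = np.array([[0, 0], [100, 0], [100, 100], [0, 100]], dtype=float)
dst = project(H_true, src)  # where the corners land under H_true

# recover H from nothing but the four point pairs
H_est = homography_from_points(src, dst)
print(np.round(H_est, 4))
```

Because these four pairs are exact, the recovered matrix agrees with `H_true` up to rounding. With hand-picked pixel coordinates the pairs are never exact, which is why extra pairs help.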

Now you can take the tracking information from your security camera and plot the position of people on your 2D floor plan. You can now analyse their walking paths, where they spent most of their time, where congestion frequently occurs, etc. Nice! But what’s even nicer is the simple code needed to do everything discussed here. The OpenCV library (the best image/video processing library around) provides all the functions you’ll need:

```python
import cv2  # import the OpenCV library
import numpy as np  # import the numpy library

# provide points from image 1 (the camera image)
pts_src = np.array([[154, 174], [702, 349], [702, 572], [1, 572], [1, 191]], dtype=np.float32)

# corresponding points from image 2 (i.e. (154, 174) matches (212, 80))
pts_dst = np.array([[212, 80], [489, 80], [505, 180], [367, 235], [144, 153]], dtype=np.float32)

# calculate matrix H (status marks which point pairs were used)
h, status = cv2.findHomography(pts_src, pts_dst)

# provide a point you wish to map from image 1 to image 2
# (perspectiveTransform expects an array of shape (1, N, 2))
a = np.array([[[154, 174]]], dtype=np.float32)

# finally, get the mapping and print it
pointsOut = cv2.perspectiveTransform(a, h)
print(pointsOut)
```

Piece of cake. Here is a short animation showing what you can do:

Be sure to check out my next post where I show you how to generate heatmaps from the tracking data you just obtained from your security cameras.


Hi Zig, how would you approach localization when you have different heights in one image? For example, the surface of a table has a different homography than the floor plane, resulting in multiple planes.

Hi Sam. That’s a tricky one. Theoretically, having one homography you can work out the one for the table. But that involves complex maths and working with relationships between distances of things in the real images, stuff like that. The best option, I think, is to create another homography for the table. It won’t be as accurate because of the smaller space that the table occupies but it might just work for you. The better your resolution, the better your homography matrix will be. Good luck! Zig

Thanks for reply. I have problem with result. I converted input into the classical Cartesian system but I got negative numbers, I think it is hard to help me over comment… It is necessary to have both images in the same resolution? Thanks

Hi, it is possible to use it in case 2D Cartesian coordinate system with inverse y-axis ( that goes down to positive values)?

Thanks.

Hi Prema. I think I understand what you’re asking me. Yes, that shouldn’t be a problem as long as you remember to invert everything on the y-axis in the code above, too. Zig

Many thanks Zig, I found your article and focused unpacking of the problem statement very helpful. All the best from Cape Town, South Africa.

Thanks, Alex. Greetings from South Australia 🙂

When you print the pointsOut, you get following coordinates [[[205.69278 84.11977]]]

So the first point 154, 174 should be 212, 80 and not 205, 84.

Why does the transformation not return the right coordinates for identical points?

Hi Igor. Good question! The reason for this is that the point pairs are only estimates. We can’t get the exact location of each point (we would need super high resolution and pixel coordinates with many, many decimal places), so the matching point pairs are only rough. Hence, the result (205, 84) won’t be an exact match for the point we provided (212, 80). However, if you provide more point pairs, the results should get more accurate. Zig

Hello,

thank you for great and useful contents yet very simple to understand!

I am a master student in Robotics and my dissertation is about camera surface mapping for feature detection, as there are so many sources available in computer vision and feature detection, I haven’t found a relatively good source about terrain mapping and camera surface mapping, the idea is to have a USB camera to be able to live stream data and create a 2D map and detect simple features of simple shapes and their size. Do you happen to know good sources regarding this project, which is bit more niche about camera surface mapping.

Kind regards

Hi Paria. Unfortunately, I can’t help you much here because I never worked in this particular field. Your best bet would be to look for academic papers (scholar.google.com) on this topic and then email the academics involved. Hope this helps. Zig

Hello, I am a college student who is distressed by my graduation design. I want to ask you to follow the designated person in the video. Can I mark different people in the video with python? Because everyone’s characteristics are too similar, I can’t follow her stably

Hello Eris. I haven’t written anything on people tracking, yet. If you need help with this, I would strongly suggest you search around on PyImageSearch for what you’re looking for. Adrian Rosebrock’s blog is full of code that will help your project. Look for “object tracking”, “people tracking”, things like this. Zig

Hi Zig. An excellent article.

Could you forward the used images and code for generating the animations to my email – raks.097@gmail.com ?

Thank you

Hi. Any code I used for this article is long gone, unfortunately. I wrote it a while ago. Sorry about that. Zig

really helpful and seemed simple too. I have a problem where camera is placed right above the plane facing exactly downwards. Can I use the same homographic transformation or should i go with other transformations.

Thank you

Hi Ananth. If the image of the floor from your camera is the shape of any quadrilateral, then you can use the same homographic transformation. Otherwise, you will need to look at another (more complicated) transformation. Zig

After following the blog the concept seems clear enough to understand 🙂 However, I have a few things I’m not clear on after reading this page.

I’ve copied the code above into a .py file. I’m not yet clear as to what is supposed to happen when I run python3 file.py (with file.py containing the code above). At the moment it just compiles and that’s it, I’m back at the command prompt.

Are there image files that the program requires? Perhaps flag arguments I need to use?

Are there other associated blogs I need to see before running programs from your website here?

Is there anything I can explain better? How can I get the results like what the examples demonstrate?

Hi Joel, I’ll get in touch with you via email. Zig

Hey, How can I achieve results as per the animation.

please suggest me.

thank you

Hi. Thanks for commenting. It’s hard to answer this question without getting more details about the problems you are having. Please email me with these and hopefully I’ll be able to help you. Zig.