Who here watches Black Mirror? Oh, I love that show (maybe except for Season 4 – only one episode there was any good, IMHO). For those who haven’t seen it yet, Black Mirror basically tries to extrapolate what society would look like if technological advances were to continue on their current trajectory.

A few of the technologies presented in that show are science fiction on steroids. But I think that many are not and that it’s only a matter of time before we reach that (dark?) high-tech reality.

A reader of this blog approached me recently with a (preprint) academic publication that sounded pretty much like it had been taken straight out of that series. It was a paper claiming that scientists can now reconstruct our visual thoughts by analysing our brain scans.

“No way. Too good to be true”, was my first reaction. There has to be a catch. So, I went off to investigate further.

And boy was my mind blown. Allow me in this post to present to you that publication out of Kyoto, Japan. Try telling me at the end that you’re not impressed either!

(Note: Although this post is not directly related to computer vision, I’m still including it in my blog because it deals with trying to understand how our brains work when it comes to visual stimuli. This is important because, many times in the history of computer vision (and AI in general), biologically inspired solutions (i.e. solutions that mimic the way we behave) have been shown to obtain really good, if not superior, results compared to other solutions. The prime example of this, of course, is neural networks.)

Machines that can read our minds

The paper in question is entitled “Deep image reconstruction from human brain activity” (Shen et al., bioRxiv, 2017). Although it has been published as a preprint, meaning that it hasn’t been peer-reviewed and technically doesn’t hold too much academic weight, the site it was published on (www.biorxiv.org) is reputable, as are the institutes that co-authored the work (ATR Computational Neuroscience Laboratories and Kyoto University, the latter being one of Asia’s highest ranked universities). Hence, I’m confident in the credibility of their research.

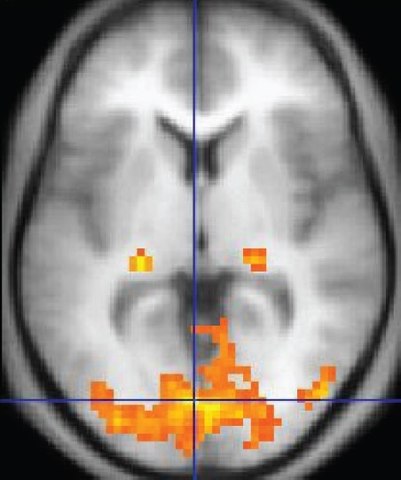

In a nutshell, the paper claims to have developed a way to reconstruct images that people are looking at or thinking about solely by analysing functional magnetic resonance imaging (fMRI) scans. fMRI machines measure brain activity by detecting changes in blood flow through regions of the brain – generally speaking, the more blood flow, the more brain activity. Here’s an example fMRI image showing increased brain activity in orange/yellow:

Let’s look at some details of their paper.

The first step was to use machine learning to examine the relationship between brain activity, as detected by fMRI scans, and the images that were being shown to test subjects. What’s interesting is that there was a focus on mapping hierarchical features between the fMRI images and real images.
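To make that "machine learning" step a little more concrete, here is a minimal sketch of learning a mapping from brain activity to a single image feature. Everything in it is an assumption made for illustration: the "scans" are synthetic random numbers, `fit_linear_decoder` and `predict` are made-up helpers, and plain linear regression trained by gradient descent is only a stand-in for whatever decoder the authors actually trained.

```python
import random

def predict(weights, bias, voxels):
    """Predict one feature value from a vector of voxel activations."""
    return sum(w * v for w, v in zip(weights, voxels)) + bias

def fit_linear_decoder(samples, targets, lr=0.5, epochs=300):
    """Fit a linear map (voxels -> feature value) with batch gradient descent."""
    n_voxels = len(samples[0])
    n = len(samples)
    weights, bias = [0.0] * n_voxels, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_voxels, 0.0
        for voxels, target in zip(samples, targets):
            err = predict(weights, bias, voxels) - target
            for j, v in enumerate(voxels):
                grad_w[j] += err * v / n
            grad_b += err / n
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias

# Synthetic "training scans": 4 voxels each, paired with a feature value
# that (by construction) depends linearly on the voxel activations.
rng = random.Random(0)
true_w, true_b = [0.8, -0.5, 0.0, 0.3], 0.1
scans = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(200)]
features = [predict(true_w, true_b, s) for s in scans]

weights, bias = fit_linear_decoder(scans, features)

# Decode the feature value for a scan the decoder has never seen.
new_scan = [0.2, -0.4, 0.9, 0.5]
decoded = predict(weights, bias, new_scan)
```

In a real setting there would be one such mapping per feature, and many thousands of voxels and features, but the idea is the same: learn from (scan, image) pairs, then predict image features from a new scan alone.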

Hierarchical features are a way to deconstruct images into ascending levels of detail. For example, to describe a car you could start by describing edges, then work up to curves, then particular shapes like circles, and then objects like wheels; finally you’d get four wheels, a chassis and some windows.

Deconstructing images by looking at hierarchical features (edges, curves, shapes, and so on) is also the way neural networks and our brains work with respect to visual stimuli. So, this plan of action to focus on hierarchical features in fMRI images seems intuitive.
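Here is a toy sketch of that idea of building features up in levels. It is hand-written purely for illustration (a real neural network learns its filters rather than having them coded by hand), and all the function names are made up: level 1 finds edges, level 2 combines edges into corners, and level 3 combines corners into a crude "rectangle" detector.

```python
def vertical_edges(img):
    """Level 1: mark brightness changes between left/right neighbours."""
    return [[abs(row[x + 1] - row[x]) for x in range(len(row) - 1)]
            for row in img]

def horizontal_edges(img):
    """Level 1: mark brightness changes between top/bottom neighbours."""
    return [[abs(img[y + 1][x] - img[y][x]) for x in range(len(img[0]))]
            for y in range(len(img) - 1)]

def corners(v_edges, h_edges):
    """Level 2: a corner is a 2x2 patch containing both kinds of edge."""
    found = []
    for y in range(len(h_edges)):
        for x in range(len(v_edges[0])):
            has_vertical = v_edges[y][x] or v_edges[y + 1][x]
            has_horizontal = h_edges[y][x] or h_edges[y][x + 1]
            if has_vertical and has_horizontal:
                found.append((y, x))
    return found

def looks_like_rectangle(corner_list):
    """Level 3: a crude 'object' detector built on the corner features."""
    return len(corner_list) == 4

# A tiny black-and-white image containing one filled square.
image = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0, 0],
    [0, 0, 0, 0, 0, 0],
]

found = corners(vertical_edges(image), horizontal_edges(image))
is_rectangle = looks_like_rectangle(found)
```

Each level only looks at the output of the level below it, never at the raw pixels, which is the essence of a feature hierarchy.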

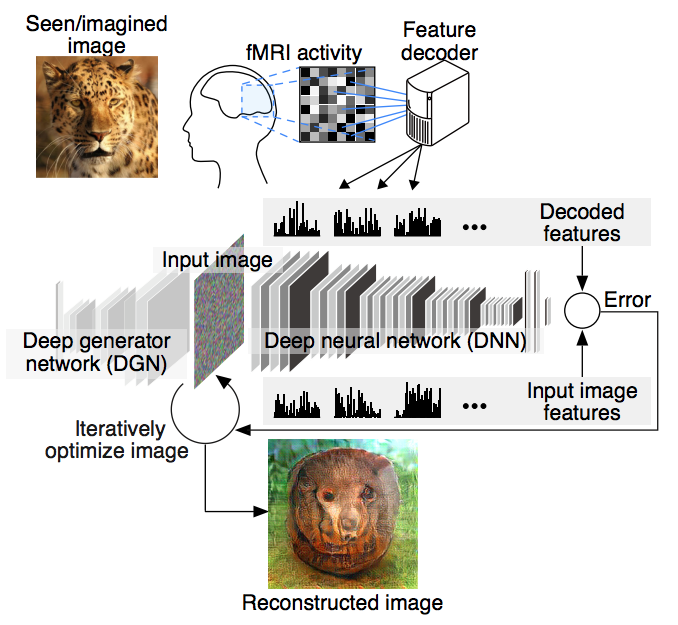

The hierarchical features extracted from fMRI images were then used in place of the features of a deep neural network (DNN). Next, an iterative process was started: base images (produced by a deep generator network) were fed into the DNN, and at each iteration individual pixels of the image were adjusted until the features the DNN computed for the image matched the features extracted from the fMRI images to within a certain error threshold.
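A stripped-down sketch of that iterative loop might look like the following. Please treat it as a toy under stated assumptions rather than the authors' method: a handful of fixed linear filters stand in for the DNN, a flat grey image stands in for the generator's base image, the "decoded" target features are faked by running a hidden image through the same filters, and the names `extract_features` and `reconstruct` are made up.

```python
def extract_features(pixels, filters):
    """Stand-in for the DNN: each feature is a weighted sum of the pixels."""
    return [sum(w * p for w, p in zip(f, pixels)) for f in filters]

def feature_error(pixels, filters, target):
    """Half the squared distance between the image's features and the target."""
    feats = extract_features(pixels, filters)
    return 0.5 * sum((f - t) ** 2 for f, t in zip(feats, target))

def reconstruct(target, filters, start, lr=0.2, threshold=1e-8, max_iters=1000):
    """Nudge the pixels until their features match the target features."""
    pixels = list(start)
    steps = 0
    while steps < max_iters and feature_error(pixels, filters, target) >= threshold:
        residual = [f - t for f, t in
                    zip(extract_features(pixels, filters), target)]
        # Gradient of the error with respect to each pixel.
        grad = [sum(r * f[j] for r, f in zip(residual, filters))
                for j in range(len(pixels))]
        pixels = [p - lr * g for p, g in zip(pixels, grad)]
        steps += 1
    return pixels, steps

# Six hand-picked filters over a 4-pixel "image" (sums and differences).
filters = [
    [1, 1, 0, 0], [0, 0, 1, 1],
    [1, -1, 0, 0], [0, 0, 1, -1],
    [1, 0, 1, 0], [0, 1, 0, 1],
]

# Pretend these features were decoded from a brain scan of someone
# looking at `viewed`; the reconstruction never sees `viewed` itself.
viewed = [0.9, 0.2, 0.3, 0.6]
target_features = extract_features(viewed, filters)

base_image = [0.5, 0.5, 0.5, 0.5]  # flat grey starting point
reconstructed, steps = reconstruct(target_features, filters, base_image)
```

The key point is that the network itself is never changed. Only the pixels move, one small step at a time, until the image "looks right" to the network.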

It’s a complicated process, I know. I’ve tried to summarise it as best as I could here! To understand it more, you’ll need to go back to the original publication and then follow a trail of referenced articles. The image below shows this process – not that it helps much 🙂 But I think the most important part of this study is the results, which are coming up in the next section.

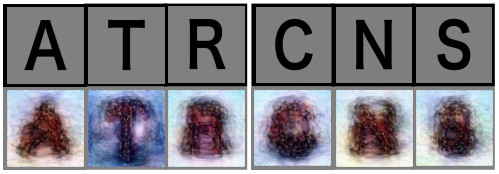

Test subjects were shown various images from three different classes: natural colour images (sampled from ImageNet), artificial geometrical shapes (in 8 colours), and black and white alphabetical letters. Scans of brain activity were conducted during the viewing sessions.

Interestingly, test subjects were also asked to recollect images they had been previously shown and brain scans during this task were also taken. The idea was to try to see if a machine could truly discern what a person was actually thinking rather than just viewing.

Results from the Study

This is where the fun begins!

But before we dive into the results, I need to mention that this is not the first time fMRI has been used to attempt to reconstruct images. Similar projects (referenced in the publication we’re discussing) go back to 2011 and 2013. The novelty of this study, however, is that it works with hierarchical features rather than with fMRI images directly.

Take a look at this video showing results from previous studies. The images are monochromatic and of minimal resolution:

Now let’s take a look at some results from this particular study. (All images are taken from the original publication.)

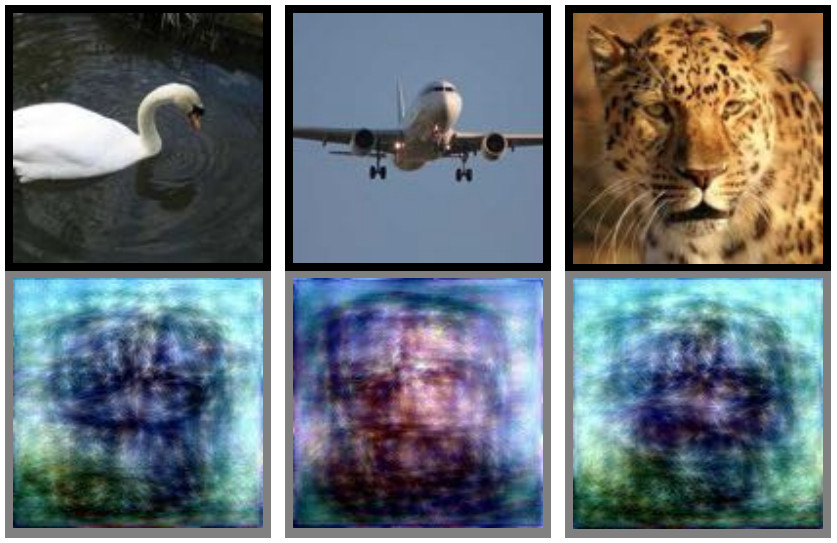

How about a reconstructed image of a swan? Remember, this reconstructed image (right) is generated from someone’s brain scan (taken while the test subject was viewing the original image):

You must be impressed with that!

Here’s a reconstructed picture of a duck:

For a presentation of results, take a look at this video released by the authors of the paper:

What about those letters I mentioned that were also shown to test subjects? Check this out:

Scary how good the reconstructions are, isn’t it? Imagine being able to tell what a person is reading at a given time!? I’ve already written a post about non-invasive lie detection from thermal imaging. If we can just work out how to get fMRI images like that (i.e. without engaging the subject), we’ll be able to tell what a person is looking at without them even knowing about it. Black Mirror stuff!

Results from the artificial geometric shapes images can be viewed here – also impressive, of course.

And how about reconstructed images from scans performed when people were thinking about the images they had been previously shown? Well, results here aren’t as impressive (see below). The authors concede this, too. But hey! One step at a time, right?

Conclusion

In this post I presented a preprint publication in which it is purported that scientists were able to reconstruct visual thoughts by analysing brain scans. fMRI images were analysed for hierarchical features that were then used in a deep neural network. Base images were fed through this network and iteratively transformed until the features of each image matched the features in the neural network. Results from this study were presented in this post and I think we can all agree that they were quite impressive. This is the future, folks! Black Mirror stuff perhaps.

The beneficial uses of this technology are numerous – communicating with people in comas is one such use case. Of course, the abuse that could come from this is frightening, too. Issues with privacy (ah, that pesky perennial question in computer science!) spring to mind immediately.

But as you may have gathered from all my posts here, I look forward to what the future holds for us, especially with respect to artificial intelligence. And likewise with this technology. I can’t wait to see it progress.
