Ever wondered whether you could zoom in on someone’s eye in a photo and analyse the reflection in it? Read on to find out what research has achieved in this area!

A previous post of mine discussed the idea of enhancing regions in an image for better clarity, much like we often see in Hollywood films. While researching that post, I stumbled upon an absolutely amazing academic publication from 2013.

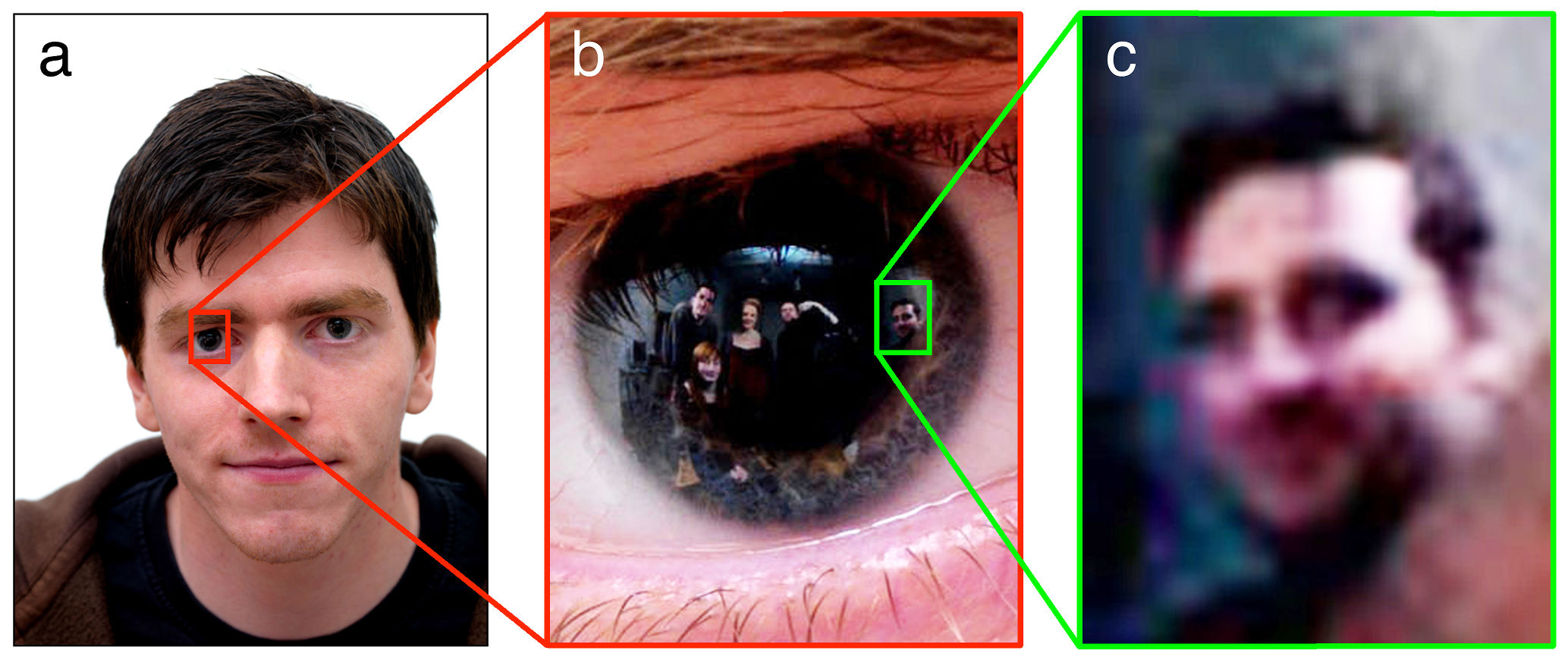

The publication in question is entitled “Identifiable Images of Bystanders Extracted from Corneal Reflections” (R. Jenkins & C. Kerr, PLoS ONE 8, no. 12, 2013). In Jenkins & Kerr’s experiments, passport-style photographs were taken of volunteers while a group of bystanders stood behind the camera watching. The researchers then zoomed in on the volunteers’ eyes and extracted the faces of the onlookers reflected in them, as shown in the figure below:

Freaky stuff, right!? Even though these reflections made up only 0.5% of the original image, you can quite clearly make out what is reflected in the eye. The experiments also showed that the bystanders were not only visible but identifiable. Unfortunately, the experiments involved only a small number of people, so the results should be taken with a grain of salt (the journal’s impact factor in 2016 was 2.8, which speaks for itself). But who cares?! The coolness factor of what they did is through the roof! Just take a look at a row of faces that they managed to extract from a few reflections captured by the camera. Remember, these are reflections located on eyeballs:

With respect to interesting uses for this research the authors state the following:

> Our findings suggest a novel application of high-resolution photography: for crimes in which victims are photographed, corneal image analysis could be useful for identifying perpetrators.

Imagine a kidnapper taking a photo of their victim and then being recognised from the reflection in the victim’s eye!

But it gets better. When discussing future work, the authors mention that the reflected scene could be reconstructed in 3D by combining the reflections from both eyes as a stereo pair. This is technically possible (we’re now venturing into work I did for my PhD), but you would need much higher-resolution images and detailed data on the outer shape of a person’s eye because, believe it or not, each of us has a differently shaped eyeball.
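To give a feel for why the eye’s shape matters, here is a minimal Python sketch that treats the cornea as a perfect spherical mirror and bounces a single camera ray off it. The sphere model, the 7.8 mm radius (roughly a typical corneal curvature) and the 500 mm camera distance are my own illustrative assumptions, not values from Jenkins & Kerr, and `reflect_off_sphere` is a hypothetical helper name. Real corneas are not perfect spheres, which is exactly why per-person shape data would be needed for any 3D reconstruction.

```python
import math
from typing import Optional, Tuple

Vec = Tuple[float, float, float]


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(a: Vec) -> Vec:
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


def reflect_off_sphere(origin: Vec, direction: Vec, centre: Vec,
                       radius: float) -> Optional[Tuple[Vec, Vec]]:
    """Trace a ray onto a mirror sphere (a simplified, hypothetical cornea model).

    Returns (hit_point, reflected_unit_direction), or None if the ray misses.
    """
    d = normalize(direction)
    oc = sub(origin, centre)
    # Solve |origin + t*d - centre|^2 = radius^2 for t (d is unit length).
    b = dot(oc, d)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # ray passes beside the sphere
    t = -b - math.sqrt(disc)  # nearer of the two intersections
    if t < 0:
        return None  # sphere is behind the ray origin (or contains it)
    hit = add(origin, scale(d, t))
    n = normalize(sub(hit, centre))  # outward surface normal at the hit point
    # Law of reflection for a mirror: r = d - 2 (d . n) n
    reflected = sub(d, scale(n, 2.0 * dot(d, n)))
    return hit, reflected


if __name__ == "__main__":
    camera = (0.0, 0.0, 0.0)
    cornea_centre = (0.0, 0.0, -500.0)  # assumed: eye 0.5 m in front of the camera
    ray = (0.005, 0.0, -1.0)            # a ray aimed slightly off-centre
    for radius_mm in (7.8, 8.0):        # two plausible corneal radii
        print(radius_mm, reflect_off_sphere(camera, ray, cornea_centre, radius_mm))
```

Swapping 7.8 mm for 8.0 mm changes where the off-centre ray ends up pointing even though the eye hasn’t moved, and errors like that would compound when trying to triangulate a scene from the reflections in two eyes.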

Is there a catch? Yes, unfortunately. I’ve purposely left this part until near the end because most people don’t read this far down a page and I didn’t want to spoil the fun for anyone 🙂 The catch is this: the Hasselblad H2D camera used in this research produces super-high-resolution images of 5,412 x 7,216 pixels. That’s a whopping 39 megapixels! By comparison, the iPhone X camera takes pictures at 12 megapixels. And the Hasselblad camera is ridiculously expensive, at US$25,000 for a single unit. However, as the authors state, the “pixel count per dollar for digital cameras has been doubling approximately every twelve months”, which means that sooner or later, if this trend continues, we will be sporting 39-megapixel cameras on our standard phones. Nice!
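To put these numbers side by side, here’s a back-of-the-envelope Python sketch. It only uses figures quoted in this post; reading the “0.5%” as a fraction of image area and picking US$100 as a phone-friendly price are my own assumptions, and the price projection is a naive extrapolation of the doubling trend that assumes nothing else about sensors, optics or phones changes.

```python
import math

# Figures quoted in the post.
SENSOR_W, SENSOR_H = 5_412, 7_216  # Hasselblad H2D image size in pixels
IPHONE_X_MP = 12.0                 # iPhone X camera, megapixels
CAMERA_PRICE_USD = 25_000.0        # price of one Hasselblad unit
REFLECTION_FRACTION = 0.005        # "only 0.5% of the initial image size" (assumed: by area)


def megapixels(width: int, height: int) -> float:
    return width * height / 1_000_000


def reflection_pixels(width: int, height: int, fraction: float) -> float:
    """Pixels covering the reflection, if it takes `fraction` of the image area."""
    return width * height * fraction


def projected_price(price: float, years: float, doubling_years: float = 1.0) -> float:
    """Price of the same pixel count if pixels-per-dollar doubles every `doubling_years`."""
    return price / 2 ** (years / doubling_years)


def years_until_price(price: float, target: float, doubling_years: float = 1.0) -> int:
    """Smallest whole number of years after which projected_price(...) <= target."""
    if price <= target:
        return 0
    return math.ceil(math.log2(price / target) * doubling_years)


if __name__ == "__main__":
    mp = megapixels(SENSOR_W, SENSOR_H)
    patch = reflection_pixels(SENSOR_W, SENSOR_H, REFLECTION_FRACTION)
    side = math.sqrt(patch)
    print(f"Hasselblad: {mp:.1f} MP, {mp / IPHONE_X_MP:.2f}x the iPhone X")
    print(f"0.5% of the frame: {patch:,.0f} pixels, about {side:.0f} x {side:.0f}")
    years = years_until_price(CAMERA_PRICE_USD, 100.0)  # hypothetical US$100 target
    print(f"Naive projection: at or below US$100 after {years} years "
          f"(US${projected_price(CAMERA_PRICE_USD, years):.2f})")
```

Running it gives roughly 39 megapixels (about 3.25 times the iPhone X), a reflection patch of around 195,000 pixels (roughly 442 x 442), and a naive eight years for the price to fall to about US$98.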

Summary

Jenkins and Kerr showed in 2013 that extracting reflections from eyeballs in photographs is not only possible but that the faces in these reflections can be identifiable. This could prove useful in the future for police trying to catch kidnappers or child sex abusers, who frequently take photos of their victims. The only caveat is that for this to work, images need to be of super-high resolution. But considering how steadily phone cameras are improving, we may not be too far from such technology being ubiquitous. To conclude, Jenkins and Kerr get the Nobel Prize for Awesomeness from me for 2013. Hands-down winners.
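Why can’t clever software simply make up for a low-resolution photo? Here’s a toy Python sketch of the problem (my own illustration, not anything from Jenkins & Kerr): two different 4 x 4 patches, one full of fine detail and one flat grey, shrink to exactly the same 2 x 2 image when each 2 x 2 block of pixels is averaged. Once that has happened, no enlargement method can tell which original it came from. The helper names and pixel values are hypothetical.

```python
from typing import List

Image = List[List[int]]  # rows of greyscale pixel values


def downsample_2x(img: Image) -> Image:
    """Average each non-overlapping 2x2 block (integer mean), halving width and height.

    A trailing odd row or column is simply dropped.
    """
    h, w = len(img), len(img[0])
    return [
        [
            (img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) // 4
            for x in range(0, w - 1, 2)
        ]
        for y in range(0, h - 1, 2)
    ]


def upsample_2x(img: Image) -> Image:
    """Nearest-neighbour enlargement: every pixel becomes a 2x2 block."""
    out: Image = []
    for row in img:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


if __name__ == "__main__":
    detailed = [[0 if (x + y) % 2 == 0 else 200 for x in range(4)] for y in range(4)]
    flat = [[100] * 4 for _ in range(4)]
    print(downsample_2x(detailed) == downsample_2x(flat))  # the small images are identical
    print(upsample_2x(downsample_2x(detailed)))            # the fine detail is gone for good
```

Real cameras lose detail through optics, sensor size and compression rather than a neat 2 x 2 average, but the principle is the same: detail that never made it into the pixels can’t be recovered from them.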


When will this software be available to the public?

You need to contact the people in charge of this project with this question. Zig

Hi, how can I get a program that I can install on my Note 9 so I can enhance the image and make out whether it’s my husband I’m seeing in this woman’s eyes?

Hi. Chances are you won’t be able to do much with the photo considering that it would have been taken with a “standard” camera. The techniques described in this article used a high-end one. Zig

Hi, what about enhancing larger reflections, like the headlight of a vehicle, where it’s possible to see the person taking the photo but not to identify them without enhancement? Is that possible, or would the same difficulties be present?

Hi. Yes, the same difficulties would be present. The photo would need to be taken in very high resolution. Zig.

Hello! How would I go about getting an image to you? I took a selfie in my backyard and I need the eye reflection cleared up as much as possible so I can show what was in front of me. Please help!! The image is quite blurry and pixelated.

For this technique to work, the photo needs to have been taken at very high resolution. A blurry and pixelated image will not be enough, unfortunately. You just can’t create or extract information from nowhere, especially not from blur (despite what Hollywood movies would have you believe). Zig

My child’s dad made a video recording of him when he was about 4 to 5 months old, and I want to find out what he was doing while he was recording my child. How much would it cost?

Chances are nothing can be done with it. The recording would have to have been made with a super-good, high-resolution camera, and those are really, really expensive. Sorry about that.

I’m trying to zoom in and see the reflection in my son’s eyes. He passed away 2 days after this photo was taken. Can you help?

Hi Lynda. So sorry for your loss. You can only zoom in on somebody’s eye to see their reflections if the image was taken at a really good resolution – like the images in the article above. A special camera needs to be used for this. Chances are your photo was taken with a “standard” phone/digital camera. I’m sorry about this. Zig

Hey Zig, I would like to extract the reflection from a video of a victim to find out who the culprits are. Is that possible? Thank you.

Hi Sam. Like I say in my blog post, the only way to get a decent image of a reflection on an eye is to have images with high enough resolution. That’s the catch! The article I discuss used photos with a resolution of 5,412 x 7,216 (39 megapixels), which is more than three times the pixel count of a 12-megapixel smartphone camera. If you have an image with very good resolution, you can zoom in on a person’s eye and get the reflection from it. This idea of zooming in to get better picture details can be understood with images recently released by NASA from its Hubble telescope: http://hubblesource.stsci.edu/sources/illustrations/ These images have a resolution of 18,000 x 18,000, which means you can zoom in until the cows come home. Zig

I have a photo taken with an iPhone X and want to know whether what I’m seeing is a reflection of a woman I suspect my husband was spending time with on the very day he sent the picture. Please let me know if you can help me figure out whether what I’m seeing is what I believe it is…

Hi Lucy, thanks for your comment. I sent you an email regarding your query. Zig

I also have a picture taken with my partner’s phone through a truck windshield, and though you can clearly see one female, I can’t quite get him and the others to show up as well. CAN YOU HELP ME?

Hi Vickie. It all depends on the resolution of the image. Chances are you won’t be able to make much out of it considering the photo would have been taken with a “standard” camera. The techniques described in this article used a high-end one. Sorry about this. Zig.